HealthManagement, Volume 21 - Issue 3, 2021

Ethical Considerations on the Greatness and Limits of Data-Driven Smart Medicine

“The development of full artificial intelligence could spell the end of the human race. It would take off on its own, and re-design itself at an ever-increasing rate. Humans, who are limited by slow biological evolution, couldn’t compete and would be superseded.”

Stephen Hawking, BBC, December 2014

“Our intelligence is what makes us human, and AI is an extension of that quality.”

Yann LeCun, VP and Chief AI Scientist, Facebook

“I don’t have a life. I have a programme.”

The Doctor, Emergency Medical Hologram Mark I, Star Trek: Voyager

Digitalisation of medicine and healthcare is, apparently, the not-so-distant future. But to make it a practical success, we need to explore and understand AI and its interaction with data use and data protection policies. An ethics expert examines the challenges facing the current digital health landscape and outlines the benchmarks for its transition towards the ‘common good’.

Key Points

- While technology has always been used to advance medicine, its moral implications should be critically evaluated, especially in view of the potential impact of AI and data.

- In Germany, striking a balance between the good use of good data and the protection of personal data is challenging. In theory, policies are on the right track, but their implementation may be questionable or may even achieve the opposite of what was intended.

- The present digital landscape is not always amenable to consensus, so in real-life settings expert ethical evaluation of new technologies should come to the forefront.

- When public and private health concerns, or justice and autonomy, pull in different directions, the common good should prevail as the decisive criterion for implementing AI and data models in medicine and healthcare.

The Ultimate Seduction or Redemption?

“Two things fill the mind with ever new and increasing wonder and awe”: the stunning human-like AI we have created, often called artificial general intelligence (AGI), and the decisions that are finally being taken away from us, especially in ethical matters. Well, something like that. What Kant actually meant was “the starry heavens above me and the moral law within me.” This original Kantian power quote from the conclusion of the Critique of Practical Reason hits the point: the true heaven is the moral law.

The existential question of healing, of redemption from illness and torment, is so close to all of us that at first glance almost any means may seem to justify this end. But only almost. In the long history of medicine, technology has always been a popular instrument for achieving progress in a profession that lies between science, art, ethics and craft. Only the craft can really be replaced by digital technologies, and even then only to a large extent and only insofar as it is understood as manual work. Even there the replacement is not complete, because as long as we humans are bodily beings, empathetic touch also expresses professional closeness and a relationship that can itself have a positive therapeutic effect. Of course, no doctor will mourn the old procedures in which urine had to be tasted, which were diagnostically imprecise and shameful for doctor and patient alike. And yet technology is not, in principle, simply an instrument; rather, it is closely interwoven with the ethical quality of medicine itself and must therefore also be addressed from the point of view of values.

AI makes it particularly clear how the profound opportunities for progress in medicine may, on the one hand, be morally imperative to use and, on the other, must also be critically questioned. Between seduction and redemption. Perhaps AGI will play the central role in the future, assuming it is possible in principle (which is probably the case; Gödel’s theorems impose no a priori limit). It has already become impressively clear, even more so in pandemic times, that successful public and private health can no longer be guaranteed, or at least no longer legitimately supported, by analogue means alone. Data, AI, me and you. And all of us. Everywhere.

No Medicine Without Good Data

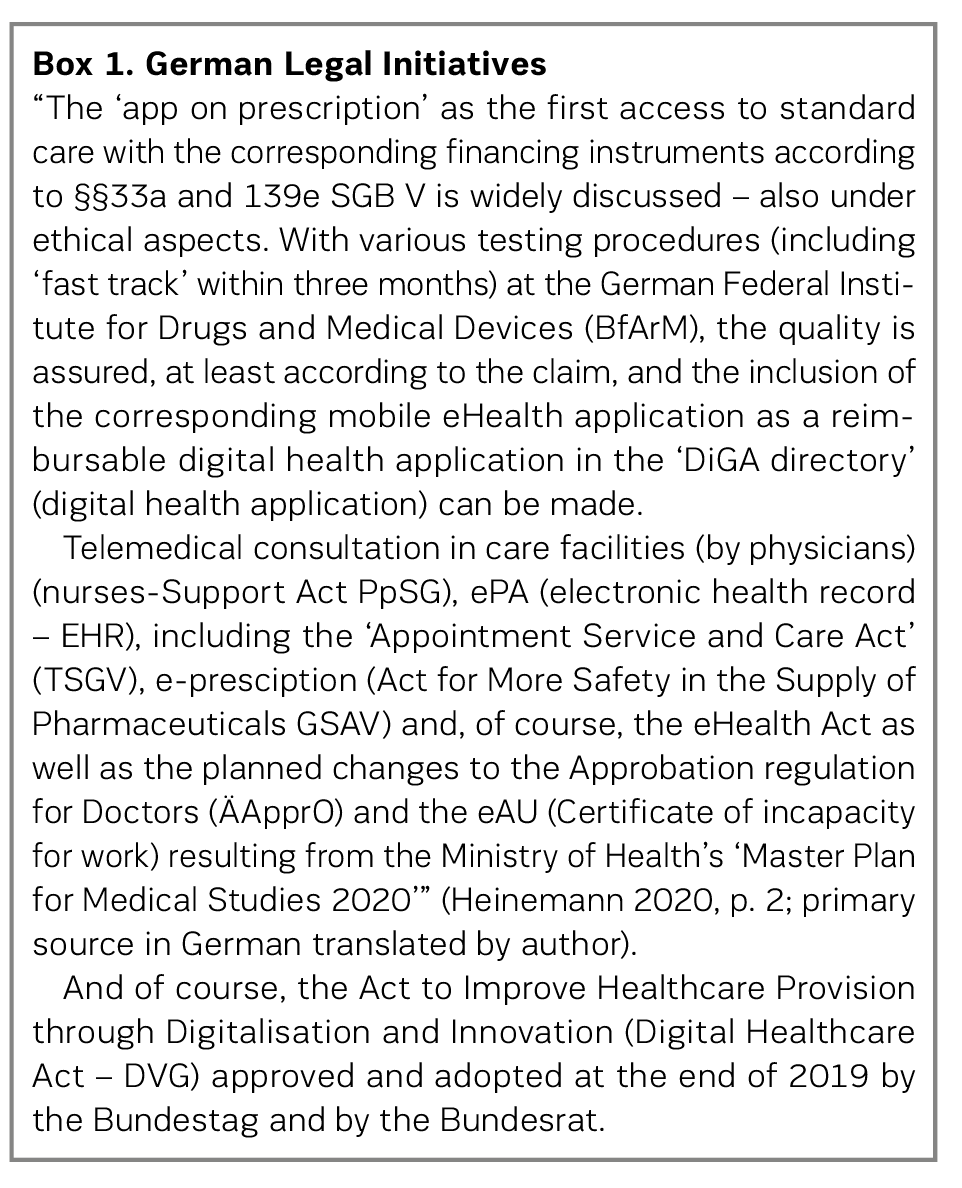

It is hard to grasp, and even harder to bear. How can a successful, highly industrialised democracy like Germany, in the middle of Europe, be so clearly overwhelmed politically and administratively by the corona crisis? There may be many reasons for this, which cannot be discussed here (cf. Heinemann and Richenhagen 2021); however, at least one of them lies in the lack of digitisation of the public health system. Without good (valid, etc.) data, there can be no good pandemic prevention (nor, by extension, good diagnostics, therapy and aftercare). Without good algorithms, i.e. good AI, there is no smart use of this data. So far, so good. Or not, because the German fear of the data octopuses (think big tech corporations) ultimately weakens and endangers the basic idea of a solidarity-based healthcare system such as Germany’s, which is legitimately worth protecting, and not ‘only’ in times of corona. The common good cannot be developed and protected against data and AI, only with them. But responsibly, with secure and protected data, above all personal data. The current data strategy of the German government offers promising prospects here (Bundeskanzleramt 2021). The German Ethics Council had already recommended ‘data donations’ as a sensible supplement to the system in 2017 (Deutscher Ethikrat 2017), especially for research and medicine. All these initiatives are good and right, but they do not have nearly the impact that would be needed to manage a pandemic. Even the sensible legislative initiatives of the last two and a half years launched by the Federal Ministry of Health (Box 1) admittedly could not make up for many years of digital backlog in medicine and the healthcare industry. And the concern that, in the end, the many good foundations will not have sufficient effect is not unfounded.
At this point, there is a risk of a massive loss of credibility for politics as a whole: of failing to mediate adequately between protection and freedom, and of achieving too little. After all, Article 1(1) and (3) of the GDPR actually frame the regulation as one that also enables the use of data, not merely one that restricts it.

In its current report, the German Advisory Council on the Assessment of Developments in the Health Care System correctly states in the strategic section:

“A patient-centric approach will simultaneously facilitate the meaningful development and use of future digital applications in healthcare. In this context, particular attention must be paid to personal rights and individual security needs. The protection of informational self-determination by means of data security measures, as well as substantive data protection law, are structured in Germany with great regulatory depth and regulatory density. In the process, a strongly pronounced one-sidedness of interpretation of data protection has developed in the sense of minimising the processing and further transmission of data. This interpretation, particularly in the form of the ‘data economy’ principle, is based on the unquestioned assumption that misuse of the processed data represents the greatest risk for patients. The significant risks to life and health of not processing data, on the other hand, are often underestimated as minimal or non-existent. Data protection in the healthcare system should protect not only data, but at the same time and above all the life and health of patients. This protection is a necessary prerequisite for being able to exercise self-determination, including informational self-determination, at all” (Sachverständigenrat zur Begutachtung der Entwicklung im Gesundheitswesen 2021, p. 711; primary source in German translated by author).

It is understandable that data protection advocates place data protection at the forefront of their efforts somewhat more clearly than perhaps other players do. However, in view of digital medicine and the healthcare industry, and thus the future of medicine and the healthcare industry in general, and even more so in view of the sad course of the pandemic in Germany in particular, it seems increasingly clear that data protection, as it is interpreted and practised, may itself come under increasingly critical scrutiny, given the financial and technological possibilities that Germany actually has or should have. The author himself finds this conclusion hard, because for an ethicist, autonomy as valued and promoted in the GDPR is very important and central, and right at the beginning of the GDPR we read that it is, of course, not about obstacles or barriers, but actually about the opposite. De facto, however, data protection has meanwhile come under widespread criticism, though certainly not always justifiably. On the one hand, this is not entirely harmless: if the criticism goes too far, the sound foundations of the GDPR could be squandered through inadequate implementation. On the other hand, the status quo is equally dangerous, because the opportunities undoubtedly associated with data, especially in medicine, must not be squandered without necessity, that is, without first-rate and clear arguments as to when the privacy of persons, which is indeed to be protected, should take precedence over health in the broader context (or even in a specific case).

It is true, of course, that the much-maligned GDPR allows for much more and offers many more solutions than most people are aware of, but only for those who are familiar with these solutions. For most professional players in medicine and the healthcare industry, and even more so for patients and their relatives, it is at best a nebulous piece of legislation whose effects are often perceived as a problem in practice and which, moreover, punishes violations with very high penalties. Ultimately, data protection as it is often practised in Germany is a clear overreach. From day-care centres to university hospitals, hardly anyone can think of personal data without immediately thinking of difficulties. As already noted, this is not always fair to data protection; on the other hand, a law needs not only a sound ratio legis but also a correspondingly transparent and feasible implementation. Of course, other areas of law are complex and other legal frameworks are difficult, but they do not affect everyone, and certainly not everyone’s existence. The right basic idea is to design data protection so that it gives every person the chance of sovereignty over their own data, limits the possibility of radical data monopolies by large internet corporations, and also prevents something like a ‘Health Schufa’ (Schufa is a German private credit bureau). In practice, this sound basic idea is mostly settled with a few clicks that yield more or less effective consents, and the sheer volume makes it hardly possible for the responsible authorities to check these provisions. Ethically, one must seriously ask whether a construct that in practice already exposes ordinary, justifiable actions to a real risk of illegality can still be meaningful. Moreover, such a construct makes its own ratio legis appear impracticable.

Veil’s (2020) criticism that the GDPR’s ‘one-size-fits-all’ approach does not do justice to the subject matter is well founded. Tax authorities should surely be assessed differently from a blogger, and multinational corporations differently from the craft business around the corner. The power of the data processor, as well as the risks and benefits of the processing, would have to be taken into account. The narrow focus on personal data in the sense of the GDPR is too undifferentiated and cannot distinguish between the very different protection needs and processing risks that exist in reality. Data are not objects, and the structure of EU data protection law does not allow any consideration of which specific use of which data should or should not be permissible. Under this prohibition principle (processing is prohibited unless expressly permitted), even ethically desirable and even fundamentally protected data processing is subject to constant justification and always on the border of illegality. So what exactly does the GDPR protect? As long as this question cannot be answered clearly, ideally in the differentiated form indicated above, interpretation will always remain problematic. Ultimately, data protection fails to protect data precisely where such protection would be justified and appropriate. At the operational level, so to speak, data protection understood in this way turns life, the profession and ultimately everything into a matter of risk prevention, as if no one, privately or professionally, were willing to comply with rules that were already in place before the GDPR. Data protection thus threatens to become a self-contradiction.

In addition, the data-economic perspective will become increasingly important: how can and will patients share in the possible economic value of ‘their’ data? This question will be asked more insistently, even if, as of today, no ownership of data is considered justifiable (for good reasons; cf. Hummel et al. 2020 and the Data Ethics Commission of the Federal Government, Datenethikkommission der Bundesregierung 2019). Licensing models (Kerber 2016), as tested for decades in the media industry, could form a bridge.

The Doctor Is In

Reconciling legitimate interests in healing with justified concerns about a dehumanised medicine driven by narrow economic calculation is the main task for medicine and the healthcare industry in the 21st century. The data-driven use of AI in particular, here of course ANI (artificial narrow intelligence), is very impressive in its medical use cases, not everywhere but in many fields of application, and gives hope to many people, professionals included. However, once concrete use cases are examined more closely, the ethical considerations are substantial, as Morley and Floridi (2020) have elaborated (Table 1). The digital public health sector rests on a somewhat different logic of values than Table 1, but the concerns are nevertheless comparable.

In particular, this concerns algorithms, the logic of their development and application, and their ethical evaluation. It is clear at first glance that justifiable ethical conclusions require both a kind of nomenclature of differentiated ethical issues and intensive expertise in digital medicine and the healthcare industry. Since ethical values, their validity and their justification have always been contested, and since the intricacies of digital transformation are not always amenable to consensus despite their scientific basis, such conclusions are not easy to reach by consensus. For the private health of each individual and for the further development of the professions, the ethical evaluation of digital innovation in medicine and the healthcare industry will become a central question.

Digital Public Health Meets Ethics

Of course, digital public health is no more free of fundamental ethical questions than digital medicine and the healthcare industry are at the individual level, for example in the doctor-patient (AI) relationship. The medical ethicist Marckmann (2020) lists eleven ethical criteria for assessing public health interventions (Table 2).

Even at first glance, this list shows that value conflicts arise, and with them the familiar difficulties of resolving them; one thinks of the mid-level principles of Beauchamp and Childress (2001), which Marckmann also applies. In the end, how the four principles are to be weighed against each other in substantively complex practical cases remains methodologically comprehensible but logically unsatisfactory; consensus usually works better under ideal conditions than under real ones.

In any case, the relationship between private and public health is particularly tense; the pandemic teaches us that not everyone is able to recognise their own health in the health of others. Several of the criteria Marckmann names are challenging to justify and implement, notably justice and autonomy. Autonomy presupposes much of the individual; justice, of the many. The impending digital health divide affects the public sector in particular. If inclusion in schools is already barely succeeding, what will be the impact of ineffective digital inclusion in healthcare?

In the case of health data used for public health, it is particularly important that every person can trust the state to use their data only for the common good. This trust is a basic prerequisite. In addition, the concept of data sovereignty offers a convincing theoretical way of reconciling the protection of each individual’s privacy with the opportunities for medicine as a whole and for the individual in particular; in practice, however, as is becoming increasingly apparent, it is extremely difficult to implement.

An AI that is used responsibly in medicine is not ‘one ring to rule them all’, but a sharp sword that should be wielded very deliberately; but then it should actually be wielded, and not pettily talked down by naysayers. Ethics is the absence of pettiness and the presence of rational argument that does not mistake itself for emotion, yet does not forget emotion either, because the Good should have an impact in our world. In the end, this is also true of smart medicine.

Conflict of Interest

The author states that no conflict of interest exists. This article does not contain any studies with human participants or animals performed by the author.

References:

Beauchamp TL, Childress JF (2001) Principles of biomedical ethics (5th ed.). New York, NY: Oxford University Press.

Bundeskanzleramt (Ed.) (2021) Datenstrategie der Bundesregierung – Eine Innovationsstrategie für gesellschaftlichen Fortschritt und nachhaltiges Wachstum – Kabinettfassung [Data strategy of the federal government – An innovation strategy for social progress and sustainable growth – Cabinet version]. Published 27 January. Available from https://www.bundesregierung.de/resource/blob/992814/1845634/f073096a398e59573c7526feaadd43c4/datenstrategie-der-bundesregierung-download-bpa-data.pdf

Datenethikkommission der Bundesregierung (2019) Gutachten der Datenethikkommission der Bundesregierung [Report of the Federal Government's Data Ethics Commission]. Available from https://www.bmi.bund.de/SharedDocs/downloads/DE/publikationen/themen/it-digitalpolitik/gutachten-datenethikkommission.pdf

Deutscher Ethikrat (2017) Big Data und Gesundheit – Datensouveränität als informationelle Freiheitsgestaltung – Stellungnahme [Big data and health – data sovereignty as the shaping of informational freedom – statement]. Available from https://www.ethikrat.org/fileadmin/Publikationen/Stellungnahmen/deutsch/stellungnahme-big-data-und-gesundheit.pdf

Garattini C et al. (2019) Big Data Analytics, Infectious Diseases and Associated Ethical Impacts. Philosophy & Technology, 32(1):69-85.

Hailu R (2019) Fitbits and other wearables may not accurately track heart rates in people of color. STAT. Published 24 July. Available from https://www.statnews.com/2019/07/24/fitbit-accuracy-dark-skin/

Heinemann S (2020) Einführung [Introduction]. In: Matusiewicz D et al. (Eds.): Digitale Medizin – Kompendium für Studium und Praxis [Digital medicine - compendium for study and practice], pp. 1-32. Berlin: MWV.

Heinemann S, Richenhagen G (2021) Corona-Fitness als gesellschaftliche Chance [Corona fitness as a social opportunity]. Preprint, published 19 January. Available from https://www.researchgate.net/deref/http%3A%2F%2Fdx.doi.org%2F10.13140%2FRG.2.2.16602.57289

Hummel P et al. (2020) Own Data? Ethical Reflections on Data Ownership. Philosophy & Technology. Available from https://doi.org/10.1007/s13347-020-00404-9

Kerber W (2016) Digital markets, data, and privacy: competition law, consumer law, and data protection. Journal of Intellectual Property Law & Practice, 11(11):856–866.

Kleinpeter E (2017) Four Ethical Issues of “E-Health”. IRBM, 38.

Liu C et al. (2018) Using Artificial Intelligence (Watson for Oncology) for Treatment Recommendations Amongst Chinese Patients with Lung Cancer: Feasibility Study. J Med Internet Res, 20(9):e11087.

Marckmann G (2020) Ethische Fragen von Digital Public Health [Ethical issues in digital public health]. Bundesgesundheitsbl, 63:199-205.

Morley J, Floridi L (2020) An ethically mindful approach to AI for health care. The Lancet, 395(10220):254-255.

Racine E et al. (2019) Healthcare uses of artificial intelligence: Challenges and opportunities for growth. Healthcare Management Forum, 32(5):272-275.

Sachverständigenrat zur Begutachtung der Entwicklung im Gesundheitswesen (2021) Digitalisierung für Gesundheit – Ziele und Rahmenbedingungen eines dynamisch lernenden Gesundheitssystems – Gutachten 2021 [Digitisation for health – Goals and framework conditions of a dynamically learning health system – Report 2021]. Available from https://www.svr-gesundheit.de/fileadmin/Gutachten/Gutachten_2021/SVR_Gutachten_2021_online.pdf

Veil W (2020) Wie auch die Gesundheit unter dem Datenschutzrecht leidet [How health, too, suffers under data protection law]. In: Heinemann S, Matusiewicz D (Eds.) Digitalisierung und Ethik in Medizin und Gesundheitswesen [Digitisation and ethics in medicine and healthcare], pp. 193-202. Berlin: MWV.

Wachter RM (2015) The digital doctor: hope, hype, and harm at the dawn of medicine's computer age. New York, NY: McGraw-Hill Education.