Computed Tomography (CT) technology has seen remarkable advancements over the last few decades, significantly impacting medical imaging. From the advent of helical CT in the 1990s to the introduction of artificial intelligence-based image reconstruction techniques in recent years, these developments have enhanced image quality while also addressing concerns about radiation exposure. This article explores the evolution of CT technology, focusing on dose reduction techniques, the impact of artificial intelligence on image reconstruction, and the ongoing challenges in standardising image quality and radiation doses across different CT systems and vendors.

The Evolution of CT Technology: From Helical to Multi-Detector Scanners

The introduction of helical CT revolutionised medical imaging by enabling continuous volume acquisition, which was a significant improvement over the traditional step-and-shoot method. This innovation laid the groundwork for subsequent advancements, particularly in multi-detector CT (MDCT) scanners. MDCT scanners allowed the acquisition of multiple thin slices simultaneously, greatly improving through-plane (z-axis) spatial resolution and reducing scan times. This capability was crucial for detailed imaging, particularly in complex areas like the chest and abdomen.

With the increased use of CT imaging, dose reduction became a critical focus. Techniques such as automatic tube current modulation (ATCM) and automatic tube voltage selection (ATVS) were developed to adjust radiation doses according to each patient's specific needs, taking into account factors like patient size and the homogeneity of the scanned area. These advancements improved patient safety by reducing radiation exposure while maintaining the high quality of diagnostic images necessary for accurate assessments.
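
The idea behind tube current modulation can be illustrated with a toy model. The sketch below is not any vendor's algorithm: the function name, the reference values, the `strength` parameter, and the attenuation coefficient are all assumptions chosen for illustration. It scales a reference tube current exponentially with patient diameter, as a simple water-attenuation model would suggest, and clamps the result to the limits of a hypothetical tube.

```python
import math

# Assumed effective linear attenuation coefficient of water (per cm).
# Illustrative value only; the real figure depends on the beam spectrum.
MU_WATER_PER_CM = 0.2


def modulated_tube_current(diameter_cm, ref_diameter_cm=30.0, ref_ma=200.0,
                           strength=0.5, min_ma=50.0, max_ma=600.0):
    """Toy ATCM model: scale tube current with patient size.

    strength=1.0 would fully compensate for the extra attenuation
    (roughly constant noise); smaller values compensate only partly.
    All defaults are hypothetical, not taken from a real scanner.
    """
    exponent = strength * MU_WATER_PER_CM * (diameter_cm - ref_diameter_cm)
    ma = ref_ma * math.exp(exponent)
    # Clamp to the assumed operating limits of the tube.
    return max(min_ma, min(max_ma, ma))


if __name__ == "__main__":
    for d in (20.0, 30.0, 40.0):
        print(f"{d:.0f} cm -> {modulated_tube_current(d):.0f} mA")
```

In this model a smaller patient receives a lower tube current than the reference patient and a larger patient a higher one, which is the behaviour the paragraph above describes. Commercial systems additionally modulate the current along the scan axis and around the gantry rotation, which this sketch ignores.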

Artificial Intelligence in CT: Deep Learning Image Reconstruction

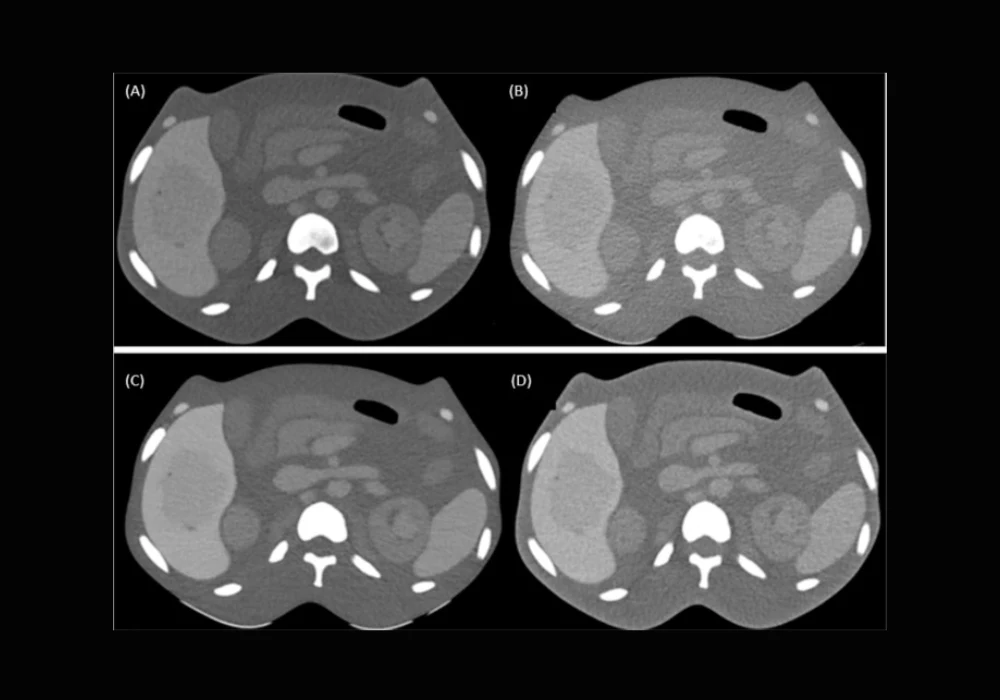

The most recent leap in CT technology involves the integration of artificial intelligence (AI) in image reconstruction, specifically through Deep Learning Image Reconstruction (DLIR). Introduced commercially in 2019, DLIR utilises neural networks to enhance image quality by improving texture, detectability of low-contrast features, and spatial resolution while reducing noise. Unlike traditional iterative reconstruction (IR) methods, which often result in artefacts and blurring at higher strengths, DLIR offers a more refined approach that preserves anatomical detail without increasing radiation doses.

This advancement is particularly significant in abdominal CT scans, where the challenge lies in visualising small or low-contrast lesions. DLIR can achieve high-quality images at lower doses, which is critical for patient safety, especially in populations requiring frequent scans, such as cancer patients. The technology's ability to produce clearer images with less noise also aids radiologists in making more accurate diagnoses, potentially catching issues that might be missed with lower-quality images.
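
To give a sense of what "high-quality images at lower doses" demands of a reconstruction method, the short sketch below uses the textbook approximation that quantum noise in a CT image scales with the inverse square root of dose. This holds approximately for filtered back projection; it is an assumption here, and it does not describe how noise behaves under IR or DLIR, which are non-linear. The function names are illustrative.

```python
import math


def relative_noise(dose_fraction):
    """Image noise relative to the full-dose scan.

    Assumes noise is proportional to 1/sqrt(dose), a common
    approximation for filtered back projection.
    """
    if dose_fraction <= 0:
        raise ValueError("dose_fraction must be positive")
    return 1.0 / math.sqrt(dose_fraction)


def required_noise_reduction(dose_fraction):
    """Fraction of noise a reconstruction would need to remove so that
    the reduced-dose image matches the full-dose noise level."""
    return 1.0 - 1.0 / relative_noise(dose_fraction)


if __name__ == "__main__":
    for fraction in (1.0, 0.75, 0.5, 0.25):
        print(f"dose x{fraction:.2f}: "
              f"noise x{relative_noise(fraction):.2f}, "
              f"reduction needed {required_noise_reduction(fraction):.0%}")
```

Under this approximation, halving the dose raises noise by about 41%, so a reconstruction would have to remove roughly 29% of the noise to match the full-dose image, and it would have to do so without degrading texture or low-contrast detectability.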

Standardisation Challenges: Variability Across Vendors and Scanners

Despite these technological advancements, standardising image quality and radiation dose remains challenging. Differences in hardware and software between vendors, as well as variations in scanner models and local protocols, contribute to inconsistencies in CT imaging. The problem is compounded by differing practices and preferences among radiology departments, which affect how dose reduction techniques are implemented and how image quality is assessed.

For instance, variations in beam geometry, filtration, and ATCM settings can lead to significant differences in image quality and radiation doses, even for the same type of scan. The lack of standardised protocols can result in unnecessary radiation exposure or suboptimal image quality, impeding accurate diagnosis. This variability underscores the need for a more unified approach to CT imaging that balances dose optimisation with the consistent production of high-quality diagnostic images.

The advancements in CT technology, from helical CT to DLIR, have significantly improved the capability of medical imaging to provide detailed and accurate diagnostic information while managing patient safety concerns related to radiation exposure. However, the journey toward standardising image quality and radiation doses across different vendors and scanner models is ongoing. As CT technology continues to evolve, the medical community must establish comprehensive guidelines and protocols that consistently deliver high-quality images at the lowest possible doses. Further research and collaboration among radiologists, medical physicists, and equipment manufacturers are essential to achieve these goals and harness the full potential of advanced CT technologies in clinical practice.

Source & Image Credit: European Journal of Radiology