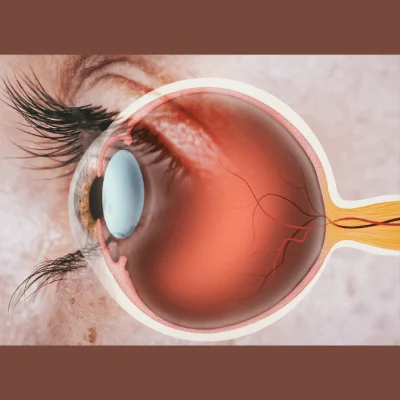

In vivo ophthalmic imaging offers insight into the status of individual cells, but noise reduces contrast, which prolongs acquisition time and risks artefacts. Adaptive optics optical coherence tomography (AO-OCT) enables high-resolution retinal imaging but suffers from speckle noise, which hinders cellular visualisation. Current methods suppress speckle by averaging multiple volumes, increasing acquisition time and patient discomfort. AI, particularly generative adversarial networks (GANs), presents a solution. A custom GAN framework, termed parallel discriminator GAN (P-GAN), is trained to recover cellular structures from single, noisy AO-OCT images, which eliminates the need for multiple acquisitions. A study recently published in Communications Medicine suggests that this approach could drastically improve throughput in ophthalmic imaging.
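The trade-off between averaging and acquisition time can be illustrated with a small simulation. This is a minimal sketch, not the study's processing pipeline: it assumes fully developed speckle (exponentially distributed intensity) on a flat scene and statistically independent volumes, and the function names, pixel count and volume counts are illustrative choices. Under those assumptions, speckle contrast falls roughly as 1/sqrt(N) for N averaged volumes, so halving the noise costs four times as many acquisitions.

```python
import random
import statistics


def speckle_contrast(pixels):
    """Speckle contrast: standard deviation of intensity divided by its mean."""
    return statistics.pstdev(pixels) / statistics.fmean(pixels)


def averaged_speckle(n_volumes, n_pixels=20000, seed=0):
    """Average n_volumes independent speckle realisations of a flat scene.

    Assumption: fully developed speckle, i.e. each intensity sample is
    exponentially distributed with mean 1.
    """
    rng = random.Random(seed)
    return [
        sum(rng.expovariate(1.0) for _ in range(n_volumes)) / n_volumes
        for _ in range(n_pixels)
    ]


if __name__ == "__main__":
    for n in (1, 4, 16, 64):
        measured = speckle_contrast(averaged_speckle(n))
        theory = n ** -0.5  # expected 1/sqrt(N) scaling for independent volumes
        print(f"{n:3d} volumes: contrast {measured:.3f} (theory {theory:.3f})")
```

In real AO-OCT data the volumes are only partly decorrelated, so the improvement per additional volume is typically smaller than this idealised scaling.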

Study Design and Development of P-GAN Architecture

Between 2019 and 2022, participants without ocular disease were enrolled in the study. After thorough eye examinations, eight eyes from seven healthy individuals (average age: 29.1 ± 9.1 years) were imaged using a specialised AO-OCT retinal imager at the National Eye Institute Eye Clinic in Maryland, USA. Participants' pupils were dilated with 2.5% phenylephrine hydrochloride and 1% tropicamide. The study aimed to develop a deep learning method to enhance the visualisation of retinal cellular structure in speckled images. Inspired by previous successes, the researchers devised a parallel discriminator GAN (P-GAN) architecture. The network pairs a twin discriminator with a CNN discriminator and was designed to faithfully recover both local cell details and overall cell patterns. Using a weighted feature fusion strategy, the twin discriminator compared complex cellular structures effectively. P-GAN successfully recovered retinal cellular structure, producing images similar to the averaged images. Qualitatively, P-GAN outperformed other deep learning networks, with clearer visualisation and fewer artefacts. Quantitative metrics confirmed this, showing improved perceptual similarity and structural correlation compared with off-the-shelf networks.

P-GAN's Success in Recovering Retinal Cellular Structures

The study demonstrated that P-GAN can recover retinal cellular structures from speckle-obscured AO-OCT images of the retinal pigment epithelium (RPE). By learning a mapping from single speckled images to their averaged counterparts, P-GAN eliminates the sequential volume averaging traditionally required for AO-OCT RPE imaging. This has significant implications for routine clinical application, particularly in enabling morphometric measurements of cell structure across various retinal locations. The success of P-GAN lies in its architecture: a twin discriminator inspired by Siamese networks assesses local structural cues, while a CNN-based discriminator focuses on recovering global features. Compared with other deep learning networks such as U-Net, traditional GAN, Pix2Pix, CycleGAN, MedGAN, and UP-GAN, P-GAN performs better in both visual quality and quantitative metrics. This is attributed to P-GAN's ability to handle heavily speckled images in which cellular structures are not readily apparent.
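The idea of a Siamese-style comparison with weighted feature fusion can be sketched in a few lines. This is only an illustration under loud assumptions and not the P-GAN implementation: the learned convolutional layers are replaced by a hand-crafted gradient-magnitude feature, the fusion weights are hypothetical fixed numbers rather than learned values, and all function names are invented for this example. What it does show is the twin principle, where the same feature extractor is applied to both images, and the fusion of per-scale feature differences into one score.

```python
import math
import random


def avg_pool2(img):
    """Downsample a 2-D list by averaging non-overlapping 2x2 blocks."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [
        [(img[2 * r][2 * c] + img[2 * r][2 * c + 1]
          + img[2 * r + 1][2 * c] + img[2 * r + 1][2 * c + 1]) / 4.0
         for c in range(w)]
        for r in range(h)
    ]


def edge_features(img):
    """Hand-crafted stand-in for a learned conv layer: local gradient magnitude."""
    h, w = len(img), len(img[0])
    return [
        [math.hypot(img[r][c + 1] - img[r][c], img[r + 1][c] - img[r][c])
         for c in range(w - 1)]
        for r in range(h - 1)
    ]


def feature_pyramid(img, levels):
    """Shared ("twin") branch: the same extractor is applied to either input."""
    feats = []
    for _ in range(levels):
        feats.append(edge_features(img))
        img = avg_pool2(img)
    return feats


def mean_abs_diff(a, b):
    """Mean absolute difference between two equally sized feature maps."""
    total = sum(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))
    return total / (len(a) * len(a[0]))


def twin_distance(img_a, img_b, weights):
    """Weighted fusion of per-level feature distances (0.0 for identical inputs)."""
    fa = feature_pyramid(img_a, len(weights))
    fb = feature_pyramid(img_b, len(weights))
    fused = sum(w * mean_abs_diff(x, y) for w, x, y in zip(weights, fa, fb))
    return fused / sum(weights)


# Toy data: a 16x16 block pattern standing in for a cell mosaic, and a
# speckled copy made with multiplicative exponential noise (an assumption).
rng = random.Random(0)
target = [[1.0 if (r // 4 + c // 4) % 2 == 0 else 0.2 for c in range(16)]
          for r in range(16)]
speckled = [[v * rng.expovariate(1.0) for v in row] for row in target]
weights = [0.5, 0.3, 0.2]  # hypothetical fusion weights, fine to coarse

print(twin_distance(target, target, weights))    # identical inputs give 0.0
print(twin_distance(target, speckled, weights))  # larger for the speckled copy
```

In an adversarial setting, a score of this kind would be computed on learned features and used as a training signal for the generator; here it simply shows that structurally different images sit further apart once features from several scales are fused.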

Efficiency and Accessibility: P-GAN's Impact on Imaging Time and Data Handling Complexity

P-GAN also significantly reduces imaging time and data handling complexity. By eliminating the need for sequential volume averaging, P-GAN enables more than a 15-fold increase in imaging locations within the same timeframe. This time saving is crucial, as data handling after image acquisition is often more resource-intensive than the acquisition itself. P-GAN further reduces the computational load by minimising the size of the raw data, which streamlines post-processing. Overall, integrating AI into the imaging pipeline not only accelerates imaging procedures but also makes AO imaging more accessible for clinical purposes. The study reflects the broader trend of using AI to improve biomedical imaging and microscopy, especially in addressing challenges related to speckle noise. Furthermore, the potential applications of P-GAN extend beyond RPE imaging to other areas affected by speckle noise, such as AO-OCT imaging of transparent inner retinal cells and optical coherence tomography angiography.

However, applying this approach to diseased eyes will require addressing challenges such as reaching consensus on image interpretation and creating appropriate training datasets. Despite these challenges, establishing a larger normative database of healthy RPE images is deemed critical for future comparisons with diseased eyes. The introduction of an AI-assisted strategy to enhance the visualisation of cellular details from speckle-obscured AO-OCT images represents a transformative advance in imaging data acquisition. By facilitating wide-scale visualisation and noninvasive assessment of cellular structure in the living human eye while reducing the time and burden associated with data handling, P-GAN brings AO imaging closer to routine clinical application, thereby enhancing our understanding of retinal structure, function, and pathology.

Source: Communications Medicine

Image Credit: iStock