The potential impact of artificial intelligence in radiology is impressive; vendors and major academic centres are developing a wide array of artificial intelligence applications and neural networks to aid radiologists in clinical diagnosis and clinical decision support.

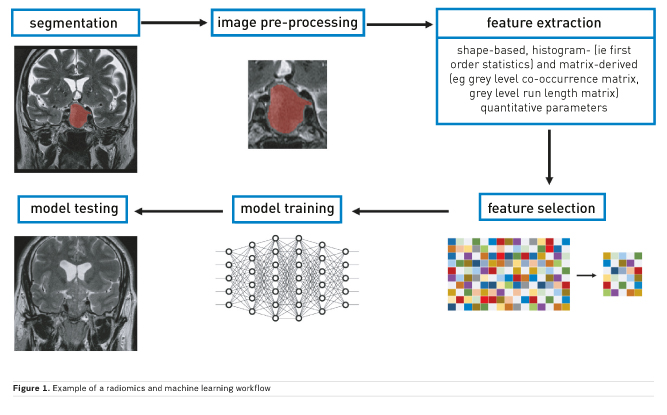

Artificial intelligence (AI) has been one of the most discussed topics in medicine, and especially in radiology, in recent years. Research papers investigating applications of machine learning in medical imaging, from image acquisition to image interpretation and prognostic evaluation, are published every month. Much of this work can be placed within the developing field of radiomics, in which large volumes of quantitative data are extracted from medical images. These data have quickly proven difficult to evaluate by traditional statistical means and have little direct applicability in the clinical setting. Researchers have therefore turned to AI and computer software that could make better use of radiomic data and translate them into practical clinical value.

Machine learning is a subfield of AI centred on the development of algorithms that give computers the ability to learn from data and subsequently make predictions without being explicitly programmed (Figure 1). Typical tasks assigned to these algorithms include:

- Classification: assignment of a given instance to one of two or more predefined, labelled classes

- Regression: prediction of a continuous output rather than a discrete label

- Clustering: grouping of inputs without predefined classes

- Density estimation: estimation of the probability distribution from which the inputs are drawn

- Dimensionality reduction: mapping of instances to a lower-dimensional space

These tasks are widely used in technology outside medicine. In medical imaging, classification is the problem most often addressed with machine learning algorithms; lesion differential diagnosis and prediction of patient prognosis, for example, are common aims of studies.
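To make the classification task concrete, the following is a minimal sketch, not taken from any cited study, of a supervised binary classifier (logistic regression fitted by gradient descent) in Python with NumPy. The two features per lesion, the benign/malignant labels and the data themselves are synthetic and hypothetical, and function names such as `train_logistic_regression` are illustrative only.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_logistic_regression(X, y, lr=0.1, epochs=2000):
    """Fit weights w and bias b by batch gradient descent on the log-loss."""
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    b = 0.0
    for _ in range(epochs):
        p = sigmoid(X @ w + b)               # predicted probability of class 1
        w -= lr * (X.T @ (p - y)) / n_samples
        b -= lr * np.mean(p - y)
    return w, b

def predict(X, w, b, threshold=0.5):
    return (sigmoid(X @ w + b) >= threshold).astype(int)

# Hypothetical, synthetic data: two standardised features per lesion
# (e.g. mean intensity and entropy). Label 0 = benign, 1 = malignant.
rng = np.random.default_rng(0)
benign = rng.normal(loc=[-1.0, -1.0], scale=0.5, size=(50, 2))
malignant = rng.normal(loc=[1.0, 1.0], scale=0.5, size=(50, 2))
X = np.vstack([benign, malignant])
y = np.array([0] * 50 + [1] * 50)

# Hold out a test set; its labels act as the reference standard.
idx = rng.permutation(len(y))
train, test = idx[:70], idx[70:]
w, b = train_logistic_regression(X[train], y[train])
accuracy = np.mean(predict(X[test], w, b) == y[test])
print(f"test accuracy: {accuracy:.2f}")
```

Real radiomic studies typically involve many more features than samples, which is why feature selection and dimensionality reduction usually precede a classifier like this one.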

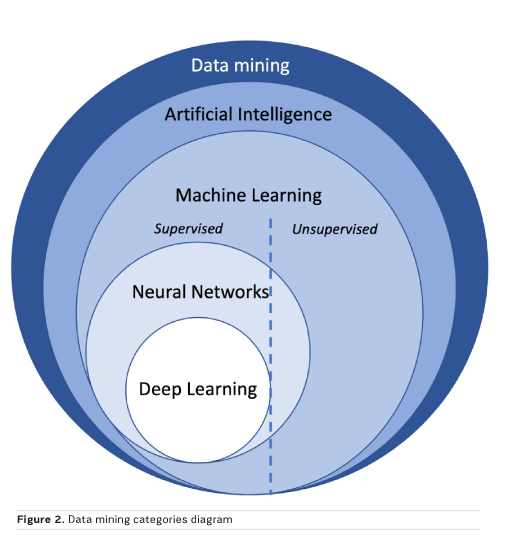

Machine learning algorithms can be subdivided by type of learning. In supervised learning, the approach most frequently used for classification in radiology, the data are labelled before training, and the same labels serve as the reference standard for evaluating algorithm performance on the test set. In unsupervised learning, no labels are given, and the software automatically clusters the inputs. In reinforcement learning, the algorithm learns from continuous feedback on its performance in the assigned task; in other words, it learns from its mistakes. Finally, these approaches may be combined to enhance prediction performance (Figure 2).
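Unsupervised learning can be illustrated with k-means, one common clustering algorithm (chosen here as an example; the text does not prescribe a specific method). In this sketch the algorithm receives only unlabelled, synthetic feature vectors and groups them by itself; the data and the `k_means` helper are hypothetical.

```python
import numpy as np

def k_means(X, k, n_iter=100, seed=0):
    """Plain k-means: alternate between assigning each point to its nearest
    centroid and moving each centroid to the mean of its assigned points."""
    rng = np.random.default_rng(seed)
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # Distance from every point to every centroid, shape (n_points, k)
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = np.argmin(dists, axis=1)
        # Keep a centroid in place if no point was assigned to it
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids

# Hypothetical unlabelled feature vectors: no classes are given to the algorithm
rng = np.random.default_rng(1)
X = np.vstack([
    rng.normal([0.0, 0.0], 0.3, size=(30, 2)),
    rng.normal([3.0, 3.0], 0.3, size=(30, 2)),
])
labels, centroids = k_means(X, k=2)
print(np.bincount(labels))  # sizes of the two discovered clusters
```

The cluster indices are arbitrary: the algorithm finds groups, but it is up to the user to interpret what each group means.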

Data extraction

Most algorithms require data to be extracted from medical images before predictive models can be developed. The most commonly used data extraction techniques fall under the umbrella of texture analysis, which evaluates inhomogeneities in images as represented by the distribution of pixel or voxel values (eg Hounsfield units for computed tomography, CT, or signal intensity values for magnetic resonance imaging, MRI). In brief, various statistical formulae are used to extract shape-based, histogram-derived (ie first-order statistics) and matrix-derived (eg grey level co-occurrence matrix, grey level run length matrix) quantitative parameters. The main difference between first- and higher-order statistics is that the latter also retain information on the spatial distribution of pixel or voxel values. Furthermore, features may be extracted not only from the original image but also after the application of filters. The most commonly used are Laplacian of Gaussian filters, whose sigma value determines whether finer or coarser texture is emphasised, and wavelet decomposition, which can reduce noise and highlight specific texture features. Finally, some algorithms, such as neural networks, require no prior feature extraction, only labelled inputs.
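The difference between first-order and higher-order texture features can be shown on two tiny, hypothetical images that contain exactly the same grey levels in different arrangements. The sketch below assumes images already discretised to a few integer grey levels and uses a single horizontal pixel offset; it computes some histogram features and a grey level co-occurrence matrix (GLCM) with NumPy. Real radiomic pipelines (for example, dedicated libraries such as PyRadiomics) use many more offsets, 3D neighbourhoods and features, and the helper names here are illustrative.

```python
import numpy as np

def first_order_features(roi):
    """Histogram-based (first-order) features: they ignore where values sit."""
    values = roi.ravel().astype(float)
    counts = np.bincount(roi.ravel())       # roi must hold non-negative ints
    p = counts[counts > 0] / counts.sum()
    return {
        "mean": values.mean(),
        "variance": values.var(),
        "entropy": -np.sum(p * np.log2(p)),
    }

def glcm(roi, levels, dx=1, dy=0):
    """Symmetric, normalised GLCM for one non-negative offset (dy, dx).
    Entry (i, j) is the frequency of grey level i having neighbour j."""
    m = np.zeros((levels, levels))
    rows, cols = roi.shape
    for r in range(rows - dy):
        for c in range(cols - dx):
            m[roi[r, c], roi[r + dy, c + dx]] += 1
    m = m + m.T                              # count each pair in both directions
    return m / m.sum()

def glcm_features(p):
    i, j = np.indices(p.shape)
    return {
        "contrast": np.sum(p * (i - j) ** 2),
        "homogeneity": np.sum(p / (1.0 + np.abs(i - j))),
    }

# Two hypothetical 4 x 4 images with identical histograms (8 zeros, 8 ones)
checker = np.indices((4, 4)).sum(axis=0) % 2   # alternating 0/1 pattern
halves = np.zeros((4, 4), dtype=int)
halves[:, 2:] = 1                              # left half 0, right half 1

for name, img in [("checkerboard", checker), ("halves", halves)]:
    print(name, first_order_features(img), glcm_features(glcm(img, levels=2)))
```

Both images share the same mean, variance and entropy because their histograms are identical, but the checkerboard has a GLCM contrast of 1.0 against about 0.33 for the two halves: the spatial arrangement is visible only to the higher-order statistic.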

Neural networks

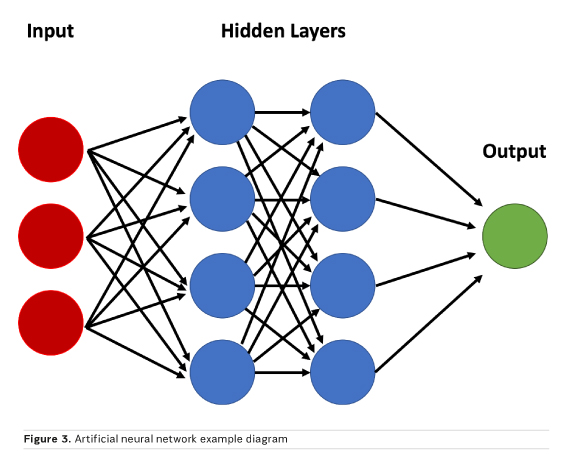

Neural networks are a particular subset of machine learning inspired by the structure of the brain, with hidden layers representing interneurons. A simple model may accept image data as a vector of voxel intensities, with each voxel serving as an input “neuron”. Next, one must decide how many layers (how deep) and how many neurons per layer (how wide) to include; this is known as the network architecture. Each neuron stores a numeric value, and each connection between neurons in different layers carries a weight representing the strength of that connection. Suitable values for these weights are estimated during training, and it is this process that enables the network to classify correctly. A “fully connected” layer, in which every neuron in one layer is connected to every neuron in the next, can be interpreted and implemented as a matrix multiplication. The final layer encodes the desired outcomes or labelled states (Figure 3). For example, to classify an image as “haemorrhage” or “no haemorrhage”, two final-layer neurons are appropriate (Zaharchuk et al. 2018).
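The forward pass of a small fully connected network, written as matrix multiplications, can be sketched as follows. The architecture (a flattened 8 x 8 patch, one hidden layer of 16 neurons with a ReLU activation, and two output neurons with a softmax) and the random weights are assumptions for illustration. The weights are untrained, so the output probabilities carry no diagnostic meaning until training has adjusted `W1` and `W2`.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    e = np.exp(z - z.max())  # subtract the maximum for numerical stability
    return e / e.sum()

rng = np.random.default_rng(42)

# Hypothetical input: an 8 x 8 image patch flattened into a 64-element vector,
# one input "neuron" per pixel.
image = rng.random((8, 8))
x = image.ravel()

# Assumed architecture: 64 inputs -> 16 hidden neurons (width) -> 2 outputs.
W1 = rng.normal(0.0, 0.1, size=(16, 64))  # weights, input -> hidden
b1 = np.zeros(16)
W2 = rng.normal(0.0, 0.1, size=(2, 16))   # weights, hidden -> output
b2 = np.zeros(2)

# Each fully connected layer is a matrix multiplication plus a bias.
hidden = relu(W1 @ x + b1)
probs = softmax(W2 @ hidden + b2)
for label, p in zip(["haemorrhage", "no haemorrhage"], probs):
    print(f"{label}: {p:.3f}")
```

Adding layers makes the network deeper and enlarging the matrices makes it wider; both choices change only the shapes of `W` and `b`, not the form of the computation.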

Imaging applications

Machine learning applications are not limited to lesion detection, although detection remains the focus of most studies in the literature. AI has many possible applications in other aspects of medical imaging, such as image acquisition, segmentation and interpretation.

Using AI, it may be possible to acquire less data, and therefore image faster, while preserving or even enhancing the rich information content of MR images. This could be achieved by training artificial neural networks to recognise the underlying structure of the images and which image features tend to occur together. Facebook and New York University (NYU) have announced that they are exploring how AI can be used to make MRI scans ten times faster. To help train the algorithms, NYU will provide Facebook with approximately 3 million MR images of the knee, brain and liver, including both images and raw scanner data. Furthermore, an intelligent MR scanner could recognise a lesion and suggest modifications to the sequence to achieve optimal characterisation of the lesion.
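What “acquiring less data” means can be illustrated by simulating an undersampled acquisition. MR data are acquired in k-space (the spatial-frequency domain), and skipping phase-encoding lines shortens the scan. The sketch below uses a hypothetical square phantom and an assumed random sampling mask that always keeps the central low-frequency lines, then produces a naive zero-filled reconstruction. In deep learning approaches of the kind described above, a network would typically be trained to map such degraded images, or the raw undersampled data, to fully sampled ones; that step is not shown here.

```python
import numpy as np

def undersample_kspace(image, keep_fraction=0.25, centre_lines=8, seed=0):
    """Simulate a faster scan: keep a random subset of k-space rows (treated
    here as phase-encoding lines), always including the low-frequency centre,
    and set all other rows to zero."""
    kspace = np.fft.fftshift(np.fft.fft2(image))  # centre k-space at the middle
    n_rows = image.shape[0]
    rng = np.random.default_rng(seed)
    mask = rng.random(n_rows) < keep_fraction
    centre = n_rows // 2
    mask[centre - centre_lines // 2: centre + centre_lines // 2] = True
    sampled = kspace * mask[:, None]              # unsampled lines become zero
    return sampled, mask

def zero_filled_reconstruction(sampled_kspace):
    """Naive reconstruction: inverse FFT with missing lines left as zeros."""
    return np.abs(np.fft.ifft2(np.fft.ifftshift(sampled_kspace)))

# Hypothetical phantom: a bright square on a dark background
image = np.zeros((64, 64))
image[20:44, 20:44] = 1.0

sampled, mask = undersample_kspace(image)
recon = zero_filled_reconstruction(sampled)
error = np.linalg.norm(recon - image) / np.linalg.norm(image)
print(f"lines acquired: {mask.sum()} of {mask.size}")
print(f"relative error of zero-filled image: {error:.2f}")
```

The relative error quantifies the blurring and aliasing that a trained network would be asked to remove; with a fully sampled mask the reconstruction returns the original image.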

Automated segmentation of medical images is an interesting application of machine learning. While manual segmentation is still considered the gold standard, it has shown limited inter- and intra-reader reproducibility. Various studies have shown the potential of AI in this post-processing task, possibly with higher accuracy and reproducibility. One example is segmentation of the left ventricle of the heart on 4D MRI exams, which allows faster, automated assessment of cardiac function (Ngo et al. 2017); in this setting, various commercial products based on machine learning are already available for clinical practice. Deep learning has been employed for unsupervised segmentation of breast tissue on mammographic exams, which is useful for reproducible scoring of breast composition, as required by current imaging guidelines (Kallenberg et al. 2016). A third field in which AI has been applied to segmentation is musculoskeletal imaging, with the aim of surpassing previous methods based on models and atlases (Pedoia et al. 2016). Probably the field in which most advances have been made is neuroradiology, where deep learning networks have proved useful for automated segmentation not only of normal brain tissue and structures but also of lesions (Akkus et al. 2017). Finally, promising results have been reported for automated segmentation of whole-body scans, such as CT exams performed for staging (Polan et al. 2016). Automated segmentation lays the groundwork for wider clinical use of machine learning, as it addresses one of its main limiting factors: time-consuming post-processing of exams.
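Agreement between two segmentations, for instance between a manual reader and an automated method, or between two readers when assessing reproducibility, is commonly quantified with the Dice similarity coefficient. The sketch below computes it for two hypothetical binary masks; it illustrates the metric only and does not reproduce the evaluation of any cited study.

```python
import numpy as np

def dice_coefficient(mask_a, mask_b):
    """Dice = 2 * |A and B| / (|A| + |B|); 0 means no overlap, 1 identical."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement by convention
    return 2.0 * np.logical_and(a, b).sum() / total

# Hypothetical 2D masks of the same structure from a reader and an algorithm
manual = np.zeros((10, 10), dtype=bool)
manual[2:8, 2:8] = True        # 36 pixels
automated = np.zeros((10, 10), dtype=bool)
automated[3:9, 2:8] = True     # 36 pixels, shifted down by one row

# Overlap is 30 pixels, so Dice = 2 * 30 / (36 + 36), about 0.833
print(f"Dice: {dice_coefficient(manual, automated):.3f}")
```

A one-pixel shift already lowers the score noticeably for a small structure, which is one reason why Dice values are usually interpreted together with the size of the structure being segmented.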

Radiologists must examine a large number of images, and therefore support tools for the detection and localisation of relevant findings are desired. Automated detection of findings within medical images based on machine learning applications goes beyond classical computer-aided detection and computer-aided diagnosis, which have been used for decades.

For example, the introduction of lung cancer screening programmes will produce an unprecedented number of chest CT scans in the near future, which radiologists will have to read in order to decide on each patient’s follow-up strategy. Several studies have not only explored but also validated AI tools for the detection of incidental findings, such as pulmonary and thyroid nodules, as well as for breast cancer screening and bone age analysis. Other applications include prostate cancer detection on MRI, determination of the coronary artery calcium score, and detection and segmentation of brain lesions (Choy et al. 2018).

Image interpretation can be one of the most challenging steps in daily radiological practice, and a high level of expertise and knowledge in a specific field may be required to reach the correct evaluation. One of the most common applications of machine learning is the evaluation of pulmonary nodules, with studies showing good accuracy in cancer detection (Causey et al. 2018). Another field in which radiomic evaluation with advanced machine learning algorithms has produced interesting results is the classification of brain tumours. Many studies show the potential of MRI for molecular subtyping of gliomas, which could guide the choice of therapeutic approach while potentially avoiding invasive techniques (Lu et al. 2018). Breast cancer diagnosis, on both mammographic and MRI exams after radiomic feature extraction, is another potential clinical application of AI. Classification of adrenal lesions is a further setting in which machine learning has shown promising results, possibly reducing the need for intravenous contrast administration during MRI exams (Romeo et al. 2018). Software is already approved for clinical use that can, for example, detect intracranial haemorrhage and prioritise the exam on the reading worklist, or identify potential stroke patients and alert stroke unit members before the regular radiological evaluation, reducing time lost in a critical setting.
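The triage use case, in which flagged exams are moved up the reading worklist, can be sketched as a priority queue. The model probabilities, the alert threshold of 0.5, the accession numbers and the ordering rule below are all hypothetical; commercial products may prioritise exams differently.

```python
import heapq
from dataclasses import dataclass, field

@dataclass(order=True)
class WorklistItem:
    sort_key: tuple
    accession: str = field(compare=False)  # not used for ordering

def build_worklist(exams, alert_threshold=0.5):
    """Order exams so that those flagged by a (hypothetical) AI model are read
    first, highest predicted probability first; the rest keep arrival order."""
    heap = []
    for arrival, (accession, prob) in enumerate(exams):
        # heapq is a min-heap: flagged exams get priority 0, others 1.
        # Flagged exams are sorted by descending probability, others by arrival.
        if prob >= alert_threshold:
            key = (0, -prob, arrival)
        else:
            key = (1, 0.0, arrival)
        heapq.heappush(heap, WorklistItem(key, accession))
    return [heapq.heappop(heap).accession for _ in range(len(heap))]

# Hypothetical head CT exams: (accession number, model probability of haemorrhage)
exams = [("CT001", 0.05), ("CT002", 0.91), ("CT003", 0.20), ("CT004", 0.67)]
print(build_worklist(exams))  # ['CT002', 'CT004', 'CT001', 'CT003']
```

Keeping arrival order for unflagged exams means that the AI output only promotes suspected positives and never silently demotes an exam below its normal place in the queue.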

Conclusion

The increasing inclusion of AI and machine learning applications in the daily radiological workflow could lead to improved quality of life and patient satisfaction. Time-consuming tasks such as image segmentation could be automated, while detection and interpretation of findings could be better supported.