AI-driven image reconstruction in radiology holds great promise for enhancing image quality and improving diagnostic capabilities. However, concerns persist regarding the reliability of AI models, the lack of clinical validation and the risk of unintentional distortions in medical imaging. At the European Congress of Radiology, experts examined key challenges, including the importance of high-quality training data, the risks of AI-based artefact correction and the necessity of robust evaluation frameworks. Their discussions underscored the need for stringent validation processes to ensure AI technologies are safely and effectively integrated into clinical practice.

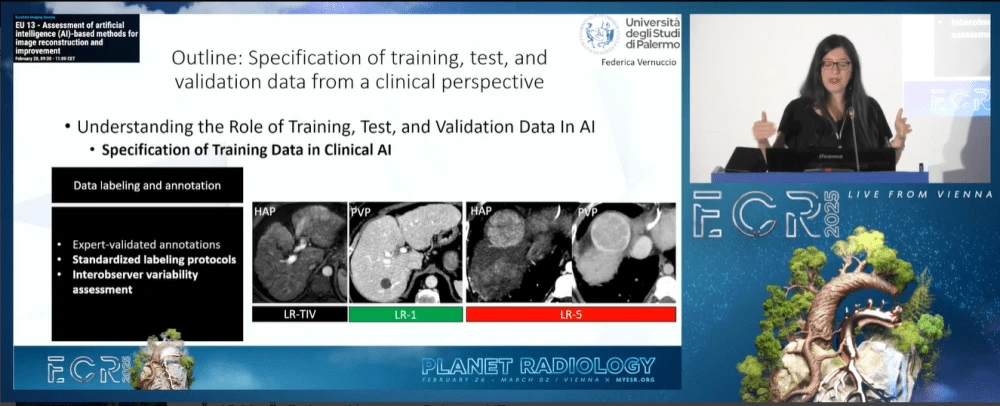

Specification of Training, Test and Validation Data from a Clinical Perspective

Federica Vernuccio, a clinical radiologist from Palermo, emphasised the pivotal role of AI in healthcare, highlighting the importance of developing reliable models that contribute positively to patient outcomes. She cautioned that “if we create a wrong AI model, we will create bad healthcare,” stressing that validation and real-world applicability are critical to ensuring safe AI implementation. Reviewing over 400 AI models developed for cardiovascular disease, she pointed to a concerning gap: none of them had undergone external validation, raising serious questions about their clinical utility.

To address this issue, Vernuccio underscored the necessity of using diverse and representative training datasets, standardised labelling protocols and rigorous validation measures. She emphasised that AI models should be tested on prospective data rather than relying solely on retrospective datasets. Furthermore, she called for greater collaboration between hospitals, researchers and AI developers to ensure that models are trained and tested in environments that reflect real-world clinical settings.
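As a rough illustration of what external validation implies for data handling, the sketch below holds out every case from chosen hospitals so that none of their data can influence model development. It is a minimal example under stated assumptions, not a method presented in the session: the record layout, the `site` field, the hospital names and the `split_by_site` helper are all hypothetical.

```python
def split_by_site(records, external_sites):
    """Separate records so held-out sites never contribute to development data.

    records: list of dicts, each with a hypothetical "site" key.
    external_sites: set of site names reserved for external validation.
    """
    development, external = [], []
    for record in records:
        if record["site"] in external_sites:
            external.append(record)
        else:
            development.append(record)
    return development, external


# Hypothetical case list; real data would carry images and labels as well.
records = [
    {"case_id": 1, "site": "hospital_a"},
    {"case_id": 2, "site": "hospital_a"},
    {"case_id": 3, "site": "hospital_b"},
    {"case_id": 4, "site": "hospital_c"},
    {"case_id": 5, "site": "hospital_c"},
]

development, external = split_by_site(records, {"hospital_c"})

# Sanity check: no site appears on both sides of the split.
development_sites = {r["site"] for r in development}
external_sites_seen = {r["site"] for r in external}
```

Splitting by site rather than by individual case is the design choice that matters here: a random case-level split would leave scans from the same hospital on both sides, which is internal rather than external validation.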

Problems of Assessing AI-based CT Image Reconstruction, Denoising or Artefact Reduction

Marc Kachelrieß from Heidelberg, Germany, addressed the complexities of AI-based image reconstruction in CT scans, warning that while AI can generate visually appealing images, it may also introduce inaccuracies that obscure critical anatomical details. One of his main concerns was the concept of "fake spectral CT," where AI generates high-energy images from low-energy scans. He dismissed this approach outright, stating, "Of course that's nonsense because that's not physics."

Kachelrieß also critiqued AI-based motion artefact correction and synthetic contrast enhancement, likening these techniques to Photoshop-like edits rather than genuine image reconstructions grounded in physical principles. Recognising the limitations of existing evaluation techniques, his team developed a new metric to assess AI-denoised images more effectively, ensuring a balanced evaluation of both small and large anatomical structures. He concluded by emphasising the need for more stringent assessment methodologies, warning, "We have to be very careful with the AI methods."

Evaluation of Image Quality after AI-based Image Reconstruction

Mika Kortesniemi from Espoo, Finland, examined the impact of AI-based reconstruction on image quality, exploring both technical and clinical evaluation methods. He stressed the importance of objective, quantitative approaches in assessing AI-generated images, ensuring that modifications do not compromise diagnostic integrity.

Kortesniemi outlined four key AI reconstruction methodologies currently used in commercial imaging applications: raw data enhancement, image-domain AI, hybrid methods and full deep learning reconstruction. Referencing a Duke University study, he highlighted how AI influences noise texture and spatial resolution, demonstrating both its advantages and potential drawbacks.

In a comparative analysis of paediatric head CT scans, Kortesniemi found that deep learning reconstruction outperformed traditional iterative methods, yielding reduced noise and improved contrast resolution. However, he also identified instances of unexpected image alterations, reinforcing the need for comprehensive validation before clinical adoption. To mitigate risks, he advocated for a multifaceted approach combining phantom studies, clinical reviews and in silico trials to verify diagnostic accuracy. He concluded with a firm recommendation: "New AI reconstruction needs clinical validation testing," stressing the importance of continuous quality assurance in AI-driven imaging.
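Comparisons of noise and contrast such as the one described above are often summarised with a contrast-to-noise ratio (CNR) measured in regions of interest (ROIs). The sketch below shows one common definition, the absolute difference of the ROI means divided by the standard deviation of the background ROI. It is an illustrative assumption, not the analysis used in the study, and all pixel values are invented.

```python
from statistics import mean, stdev


def contrast_to_noise_ratio(target_roi, background_roi):
    """CNR = |mean(target) - mean(background)| / stdev(background).

    Both arguments are flat sequences of pixel values (for example, HU)
    taken from regions of interest in the same reconstructed image.
    """
    noise = stdev(background_roi)  # sample standard deviation
    if noise == 0:
        raise ValueError("background ROI has zero variance; CNR is undefined")
    return abs(mean(target_roi) - mean(background_roi)) / noise


# Invented values: same mean contrast, different background noise.
target = [40, 42, 38, 40]
background_iterative = [30, 32, 28, 30]      # noisier background
background_deep_learning = [30, 31, 29, 30]  # smoother background

cnr_iterative = contrast_to_noise_ratio(target, background_iterative)
cnr_deep_learning = contrast_to_noise_ratio(target, background_deep_learning)
```

A higher CNR alone does not show that an image is diagnostically better. A single ratio does not capture changes in noise texture or spatial resolution, which is one reason phantom measurements are combined with clinical review.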

While AI offers significant advancements in image reconstruction, experts cautioned against over-reliance on unvalidated models and misleading enhancements. Without rigorous validation, AI-generated images may introduce unintended distortions that compromise diagnostic accuracy. To ensure AI’s safe and effective integration into radiology, standardised validation protocols, robust testing frameworks and close collaboration between radiologists and AI developers are essential. Only through stringent oversight and continuous evaluation can AI-driven imaging contribute meaningfully to patient care without unintended risks.

Source & Image Credit: ECR 2025