ICU Management & Practice, Volume 25 - Issue 4, 2025

In today’s rapidly evolving digital era, health information technologies offer enormous opportunity and promise to transform patient care. To unlock their full potential, these innovations must be tested with the same scientific rigour as life-saving drugs and devices. This article invites readers to rethink how we evaluate new technologies in healthcare, using a structured framework to ensure they deliver safer, smarter, and more impactful care.

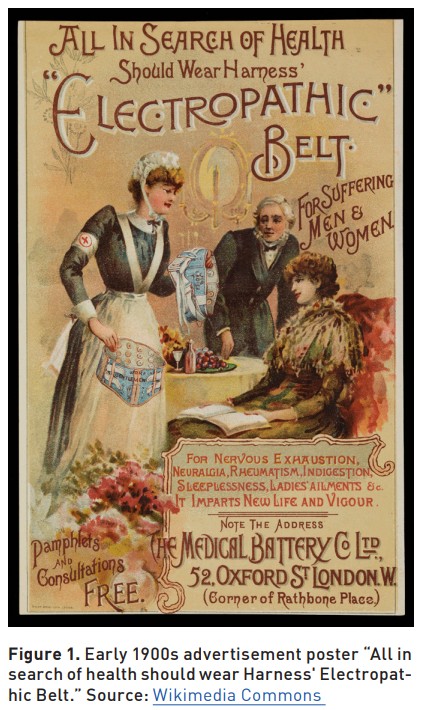

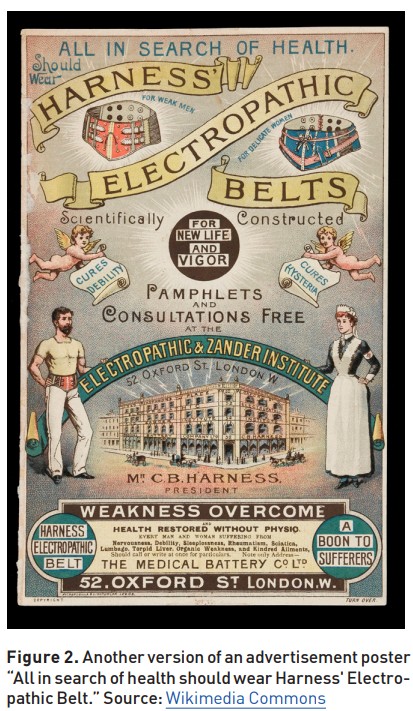

At the beginning of the 20th century, the Heidelberg Electric Belt was a medical hit. The device used batteries to generate a current, claimed to be therapeutic, when wrapped around the body. It was promoted to alleviate a range of conditions, from paralysis to digestive issues and impotence, and was essentially sold as a general-purpose cure (Figures 1 and 2). It was distributed worldwide for almost 20 years, was included in many mail-order catalogues, and had estimated sales in the hundreds of thousands of units.

Around 1910, the American Medical Association and medical press began to more aggressively debunk electric belt claims, which eventually led to a decline in their availability. As medical standards improved, the device was proven ineffective and dropped from legitimate use. It was shown that no real clinical or physiological support for the advertised effects existed.

At that time, there was no requirement to test new devices or medications to demonstrate benefit to the patient. Manipulative marketing, backed by a chain of endorsements from leading doctors, was often used to promote new devices.

Since then, significant progress has been made in regulating pharmaceuticals, consumer products, and medical devices. However, the rapid evolution of health information technology (HIT), including Electronic Medical Records (EMR), Clinical Decision Support Systems (CDSS), and related artificial intelligence (AI) technologies, leaves us in a situation much like the beginning of the 20th century. Vendor-driven case studies and anecdotal reports often shape the narrative of HIT success, yet such accounts can be misleading.

The example above illustrates the dangers of adopting novel technologies without proper scientific validation. It helps us appreciate the importance of evaluation frameworks to protect patients and uphold clinical integrity.

Modern Days: Why Structured Evaluation Matters

Critical care has always been at the forefront of technology adoption - from early waveform monitoring to advanced predictive models for patient deterioration. With this evolution, the importance of evaluation has only grown. Technologies that once offered simple alarms now make complex recommendations. As a result, the field must adapt its evaluation techniques accordingly.

HIT plays an increasingly critical role in hospital and ICU environments, where clinical decisions are time-sensitive and complex. It is already evident that electronic tools enhance practice and safety, but proper evaluation ensures that these technologies deliver on their promise to enhance care without introducing new risk. In critical care, rigorous evaluation of new HIT aligned with scientific methods is essential for safe and effective use of technologies.

The challenge of evaluation is compounded by the rapid advancement of machine learning (ML) and large language models (LLMs), which have introduced a new class of CDSS. The performance of these models can be dynamic, sensitive to variability in input data, and sometimes difficult to interpret. Such complexity and opacity pose new challenges for the evaluation of HIT.

Without controlled studies or a clear implementation context, it is difficult to discern real clinical impact. Anecdotal narratives can mislead as they emphasise success stories while ignoring failures or false positive cases. Vendors use marketing tools designed to build brand reputation and sales. Without scientific evaluation (e.g., peer-reviewed trials), such claims can overestimate benefits and understate risks such as alert fatigue, workflow disruptions or bias in new CDSS. A scientific evaluation framework provides the methodological rigour needed to separate signal from noise. Reliance on unverified testimonials may lead to the adoption of unsafe or ineffective systems.

Traditionally, technical validation was considered a final checkpoint before technology deployment. Today, HIT evaluation must be seen as a rigorous, multidisciplinary scientific discipline. Combining principles from biomedical informatics, human factors engineering, implementation science, and systems design, modern HIT evaluation seeks to generate reproducible evidence that supports both clinical outcomes and operational feasibility.

Initial HIT evaluations focused on the transition from paper to digital systems. These early studies typically measured documentation time, user satisfaction, and basic error rates. While foundational, they lacked the scope to address how HIT influences complex decision-making processes, reproducibility, transparency, and fairness.

The Epic Sepsis Model (ESM) is a modern example. Promotional materials and hospital press releases described the ESM as a powerful tool for early detection of sepsis, claiming improved patient outcomes and reduced mortality rates. An independent evaluation published in JAMA Internal Medicine concluded that the tool had poor sensitivity and limited clinical utility in the real-world hospital environment. It missed two-thirds of sepsis cases and generated excessive false positives (Wong et al. 2021). Following the critique, promotional materials about the machine learning model for early sepsis detection are now available on the protected Epic User portal, accessible only to authorised users.

Unvalidated technologies and algorithms pose serious risks to patient safety. They can produce excessive false positives, leading to alert fatigue and clinician desensitisation, or miss critical cases, delaying essential interventions. Another challenge is the phenomenon of "pilot purgatory," where promising tools stagnate after small-scale trials due to a lack of generalisable evidence. Many hospital systems are reluctant to scale these tools without a formal evaluation process.
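The two failure modes described above, missed cases and excessive false positives, map onto standard diagnostic accuracy measures. The following is a minimal sketch of how they can be computed from alert counts. The class name, function names, and the counts themselves are illustrative assumptions, not figures from any published evaluation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertCounts:
    """Confusion-matrix counts for one evaluation period (hypothetical)."""
    true_pos: int   # alert fired, patient had the condition
    false_pos: int  # alert fired, patient did not have the condition
    false_neg: int  # no alert, patient had the condition (missed case)
    true_neg: int   # no alert, patient did not have the condition


def sensitivity(c: AlertCounts) -> float:
    """Share of true cases that the tool flagged."""
    return c.true_pos / (c.true_pos + c.false_neg)


def positive_predictive_value(c: AlertCounts) -> float:
    """Share of alerts that pointed at a true case."""
    return c.true_pos / (c.true_pos + c.false_pos)


def alerts_per_true_case(c: AlertCounts) -> float:
    """Number of alerts a clinician reviews to find one true case."""
    return (c.true_pos + c.false_pos) / c.true_pos


# Illustrative counts only; not taken from any study.
example = AlertCounts(true_pos=30, false_pos=270, false_neg=60, true_neg=1640)

if __name__ == "__main__":
    print(f"Sensitivity: {sensitivity(example):.2f}")
    print(f"Positive predictive value: {positive_predictive_value(example):.2f}")
    print(f"Alerts per true case: {alerts_per_true_case(example):.1f}")
```

With these made-up counts, the tool misses two of every three cases and clinicians review ten alerts for each true case. Low sensitivity reflects delayed interventions, while a high alert burden per true case is one measurable proxy for alert fatigue.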

Drawing parallels with pharmaceutical development, HIT should go through phases of evaluation, from concept to post-market monitoring. A staged model ensures each system is vetted across technical, clinical, and operational domains.

What is HIT Evaluation

HIT evaluation differs fundamentally from general IT evaluation because of the clinical context in which it operates. While traditional IT may focus on speed, reliability, and user interface, HIT must be evaluated for its impact on patient safety, clinical workflows, and health outcomes. HIT evaluation is an evidence-generating discipline focused on both the process and outcomes of technology use in healthcare.

Key components include clinical impact (e.g., improved diagnosis or reduced complications), workflow integration (e.g., reduction in cognitive load), safety (e.g., error reduction), usability (e.g., clinician satisfaction), cost-effectiveness, and implementation fidelity. These domains form the backbone of the evaluation matrix and guide the selection of appropriate methods and metrics for each project.

HIT evaluation is built on a wide range of scientific disciplines. While randomised controlled trials (RCTs) are often impractical for HIT interventions, alternative designs, such as interrupted time series, stepped-wedge cluster trials, and pragmatic trials, offer robust options. Detailed clinical epidemiology guidance is needed for selecting study designs that are appropriate to the technology and clinical setting. Structured EHR data is foundational but must be supplemented with audit logs, clinician interaction metrics, and outcome indicators such as mortality and length of stay.
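As an illustration of one of the designs named above, the sketch below estimates the level change and slope change in a simple interrupted time series by fitting separate linear trends before and after go-live. The function names and the monthly values are hypothetical. A real analysis would also need to address autocorrelation, seasonality, and concurrent interventions, which this sketch does not.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def interrupted_time_series(values: Sequence[float], go_live: int) -> Tuple[float, float]:
    """Return (level_change, slope_change) at the go-live period.

    values  : one outcome measurement per period (e.g. per month)
    go_live : index of the first period after the system went live
    """
    if go_live < 2 or len(values) - go_live < 2:
        raise ValueError("need at least two periods before and after go-live")
    pre_t = list(range(go_live))
    post_t = list(range(go_live, len(values)))
    pre_slope, pre_intercept = fit_line(pre_t, values[:go_live])
    post_slope, post_intercept = fit_line(post_t, values[go_live:])
    # Compare what the pre-trend predicts at go-live with what the post-trend shows.
    expected = pre_intercept + pre_slope * go_live
    observed = post_intercept + post_slope * go_live
    return observed - expected, post_slope - pre_slope


# Hypothetical monthly outcome rate: 12 months before and 12 months after go-live.
monthly_rate = [10 + 0.5 * t for t in range(12)] + [13 + 0.3 * (t - 12) for t in range(12, 24)]
level_change, slope_change = interrupted_time_series(monthly_rate, go_live=12)
```

In this synthetic series, the outcome drops by 3 units at go-live and its monthly trend flattens by 0.2 units. The value of the design is that it separates an immediate step from a gradual change in trend, instead of comparing simple before-and-after averages.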

Structure of Modern HIT Evaluation

For robust evaluation, no single study can answer all questions. Typically, a five-stage model is needed for systematic evaluation (Herasevich and Pickering 2021):

- Stage 0: Problem Definition and Co-Design – Engage stakeholders to define clinical problems and co-develop technology solutions that meet real-world needs.

- Stage 1: Technical Validation – Evaluate the predictive performance of the tool (ML model) using metrics like AUROC on retrospective datasets.

- Stage 2: Clinical Simulation – Use silent mode testing on real-time data to estimate practical utility before live deployment.

- Stage 3: Real-World Pilot – Conduct a limited-scope deployment to observe initial clinical use and refine the system.

- Stage 4: Implementation and Outcome Evaluation – Measure changes in patient outcomes, clinician behaviour, and system-level metrics.
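To make the Stage 1 metric concrete, AUROC can be read as the probability that a randomly chosen patient with the condition receives a higher risk score than a randomly chosen patient without it. The sketch below computes it directly from that definition. The function name and the toy labels and scores are assumptions for illustration; in practice, an established statistical library would normally be used on a retrospective dataset.

```python
from typing import Sequence


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve via pairwise comparison.

    labels : 1 for patients with the condition, 0 for those without
    scores : model risk scores, higher meaning higher predicted risk
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    # Each positive/negative pair counts 1 if ranked correctly, 0.5 if tied.
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


# Toy retrospective sample (hypothetical values).
toy_labels = [0, 0, 1, 1]
toy_scores = [0.1, 0.4, 0.35, 0.8]
toy_auroc = auroc(toy_labels, toy_scores)
```

Here three of the four positive/negative pairs are ranked correctly, giving an AUROC of 0.75. A strong AUROC on retrospective data is only the entry point to the later stages: it says nothing about alert timing, workflow fit, or patient outcomes.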

Evaluating LLMs and Next-Generation CDS

Large Language Models (LLMs, e.g. ChatGPT) introduce unique challenges not seen in traditional CDS tools. Their outputs can vary with slight input changes. Systems are prone to hallucinations and often lack traceable reasoning paths. Evaluation must include dimensions such as output consistency, factual grounding, and prompt sensitivity.
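Two of these dimensions, output consistency and prompt sensitivity, can be approximated with simple agreement measures. The sketch below is one possible approach under stated assumptions: `stub_model` is a hypothetical stand-in for a real LLM call, the prompts are invented, and exact-match agreement after text normalisation is a deliberately crude proxy for clinical equivalence of answers.

```python
from collections import Counter
from typing import Callable, Sequence


def normalise(text: str) -> str:
    """Lower-case and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def modal_agreement(answers: Sequence[str]) -> float:
    """Share of answers that match the most common answer."""
    counts = Counter(normalise(a) for a in answers)
    return counts.most_common(1)[0][1] / len(answers)


def output_consistency(model: Callable[[str], str], prompt: str, runs: int = 5) -> float:
    """Agreement across repeated runs of the same prompt (1.0 = fully consistent)."""
    return modal_agreement([model(prompt) for _ in range(runs)])


def prompt_sensitivity(model: Callable[[str], str], paraphrases: Sequence[str]) -> float:
    """Disagreement across paraphrases of one question (0.0 = insensitive)."""
    return 1.0 - modal_agreement([model(p) for p in paraphrases])


def stub_model(prompt: str) -> str:
    """Hypothetical placeholder for an LLM; keys on a single word to mimic brittleness."""
    return "Sepsis likely" if "lactate" in prompt.lower() else "Sepsis unlikely"


paraphrases = [
    "Lactate 4.1, fever, hypotension. Is sepsis likely?",
    "Febrile patient with low blood pressure and elevated lactate: sepsis?",
    "Patient is hot with low blood pressure. Is sepsis likely?",
]
consistency_score = output_consistency(stub_model, paraphrases[0])
sensitivity_score = prompt_sensitivity(stub_model, paraphrases)
```

The stub is deterministic, so its consistency is 1.0, yet one of the three paraphrases flips its answer, giving a prompt sensitivity of one-third. Factual grounding is not covered here and would require comparison against a curated reference standard.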

Bias detection, user prompt design, and explainability testing are emerging needs in HIT. It has become evident that standard HIT evaluation metrics are insufficient for LLM testing, and novel approaches such as response quality benchmarking and fairness audits are required.

Finally, regulation has not yet caught up with AI's capabilities. There is a growing need for methodological standards and oversight mechanisms that address dynamic systems.

Conclusion

Today, with healthcare dominated by digital innovations, scientific evaluation is no longer optional. Software tools that influence care must be held to the same evidence standards as drugs and devices. They should be supported by scientific evaluation methodologies applied in real-world settings.

We call on ICU leaders, clinical informaticians, and developers to embrace evaluation as a core professional obligation. Only through structured, scientific inquiry can we ensure that ML and LLM-based decision support tools truly advance patient care without compromise.

Conflict of Interest

None.

References:

Epic. Saving lives with immediate sepsis treatment [Internet]. Epic Systems Corporation; 2018. Available from: https://www.epic.com/epic/post/saving-lives-immediate-sepsis-treatment/

Herasevich V, Pickering B. Health information technology evaluation handbook: from meaningful use to meaningful outcomes. 2nd ed. CRC Press (HIMSS Book Series); 2021. p. 198. ISBN: 9780367488215.

Wong A, Otles E, Donnelly JP, et al. External validation of a widely implemented proprietary sepsis prediction model in hospitalized patients. JAMA Intern Med. 2021;181(8):1065-70.