Pancreatic ductal adenocarcinoma remains one of the deadliest malignancies, largely because it is frequently diagnosed at an advanced stage. Although contrast-enhanced computed tomography (CT) is the standard imaging method for evaluating pancreatic cancer, nearly 40% of early-stage tumours can still go undetected. Deep learning approaches have emerged as a promising way to close this diagnostic gap, offering enhanced capabilities in identifying subtle imaging patterns and improving detection accuracy. These models have evolved rapidly, demonstrating encouraging performance in classification, segmentation and hybrid tasks related to pancreatic tumour recognition. However, despite technical progress, several challenges continue to hinder clinical integration.

Model Capabilities and Clinical Potential

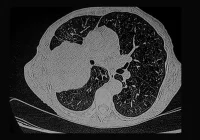

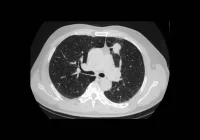

Deep learning techniques are increasingly used to classify and segment pancreatic lesions on CT scans, learning complex spatial patterns directly from image data. Many of these models rely on convolutional layers that extract features such as texture and density, which may be imperceptible to the human eye. Semantic segmentation models go further, delineating tumours from surrounding structures at the voxel level to localise lesions precisely. Architectures such as U-Net and Vision Transformers allow models to capture both broad contextual information and fine detail, and some incorporate attention mechanisms to weigh the relevance of different features. Hybrid pipelines that combine radiomics with deep learning also show promise for improving both accuracy and interpretability. Together, these capabilities position deep learning as a valuable aid for flagging lesions that might otherwise go unnoticed, particularly on non-contrast CT scans.
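
To illustrate what a convolutional layer does, the sketch below slides a small kernel across a toy image and records a weighted sum at each position. The 5x5 "slice" uses hypothetical intensity values (not real Hounsfield units) with a denser region on the right, and the vertical-edge kernel is hand-written for illustration; a trained network learns many such kernels from data rather than having them specified.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the response map.

    As in most deep learning libraries, this is cross-correlation:
    the kernel is applied as-is, without flipping.
    """
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            # Weighted sum of the image patch currently under the kernel
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


# Hypothetical toy slice: uniform tissue on the left, denser tissue on the right
slice_ = [[40, 40, 40, 90, 90] for _ in range(5)]

# Hand-written vertical-edge kernel (illustrative; real kernels are learned)
edge_kernel = [[-1, 0, 1],
               [-1, 0, 1],
               [-1, 0, 1]]

response = conv2d_valid(slice_, edge_kernel)
# → [[0.0, 150.0, 150.0], [0.0, 150.0, 150.0], [0.0, 150.0, 150.0]]
```

The response is zero over uniform tissue and large where intensity changes, which is the kind of local texture and density cue that deeper layers combine into lesion-level features.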

Notable developments include segmentation-based architectures that not only identify abnormalities but also estimate their probability of malignancy. Comparative studies have shown that deep learning can match or even exceed radiologist performance, especially for small or visually inconspicuous tumours. Some systems have achieved high sensitivity in large validation cohorts, providing further evidence of their potential. However, early-stage lesions, especially those smaller than two centimetres, remain particularly difficult to detect: across models, sensitivity for small tumours tends to drop markedly, underscoring the need for continued optimisation in this area.
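
Because performance depends so strongly on lesion size, sensitivity (the share of confirmed tumours a model flags) is more informative when reported separately for lesions below and above the two-centimetre mark. The sketch below assumes each confirmed tumour is recorded as a hypothetical (diameter in cm, detected) pair; the function name and the case list are illustrative, not data from any study.

```python
def sensitivity_by_size(cases, threshold_cm=2.0):
    """Return detection sensitivity for tumours below, and at or above, a size threshold.

    `cases` holds (diameter_cm, detected) pairs for pathologically confirmed tumours,
    so sensitivity = detected / total within each size group.
    """
    groups = {"small": [0, 0], "large": [0, 0]}  # [detected, total]
    for diameter, detected in cases:
        key = "small" if diameter < threshold_cm else "large"
        groups[key][1] += 1
        if detected:
            groups[key][0] += 1
    # NaN flags a size group with no cases rather than silently reporting 0
    return {k: (d / n if n else float("nan")) for k, (d, n) in groups.items()}


# Hypothetical cases, for illustration only
cases = [(1.2, False), (1.5, True), (1.8, False), (1.9, True),
         (2.5, True), (3.1, True), (4.0, True), (2.2, False)]
rates = sensitivity_by_size(cases)
# → {'small': 0.5, 'large': 0.75}
```

A single pooled figure (5 of 8 here) would hide the gap between the two groups.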

Challenges in Generalisation and Reproducibility

Although the technical accuracy of deep learning models is improving, significant barriers remain to moving them from research environments into routine clinical use. One of the most critical is the limited generalisability of models trained on narrow datasets: performance can decline substantially when a model is applied to patient populations or imaging conditions that differ from its training data. Anatomical variations, rare tumour types and differences in image acquisition protocols can all affect detection performance. Publicly available segmentation models have been shown to miss or misidentify tumours in cases involving anatomical anomalies such as fat infiltration, highlighting the need for more robust training strategies and more diverse datasets.

In addition to generalisation issues, reproducibility remains a concern. Variability in model development practices, including annotation protocols and evaluation metrics, contributes to inconsistent performance across studies. Many models lack external validation or standardised reporting, making it difficult to compare or replicate findings. Efforts to address these limitations include proposed reporting checklists and multi-institutional benchmarks that promote transparency and consistency. These initiatives aim to ensure that models deliver reliable and reproducible results in varied clinical settings. Nevertheless, discrepancies in labelling standards and ground truth definitions remain a source of bias, and ongoing validation across different institutions will be essential.
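
Metric choice alone can make results hard to compare. As a minimal sketch, assuming binary segmentation masks represented as sets of voxel coordinates (a simplification chosen for clarity), the Dice coefficient and intersection-over-union (IoU) computed on the same prediction yield different numbers; the masks below are hypothetical.

```python
def dice_and_iou(pred, truth):
    """Compute Dice and IoU for two binary masks given as sets of voxel coordinates."""
    inter = len(pred & truth)
    total = len(pred) + len(truth)
    union = len(pred | truth)
    # Convention here: two empty masks count as perfect agreement
    dice = 2 * inter / total if total else 1.0
    iou = inter / union if union else 1.0
    return dice, iou


# Hypothetical ground-truth and predicted tumour voxels (z, y, x)
truth = {(0, 0, 0), (0, 0, 1), (0, 1, 0), (0, 1, 1)}
pred = {(0, 0, 1), (0, 1, 1), (0, 1, 2)}
dice, iou = dice_and_iou(pred, truth)
# dice ≈ 0.571, iou = 0.4
```

The two metrics are related by Dice = 2·IoU / (1 + IoU), so neither is wrong, but a study reporting one cannot be compared directly with a study reporting the other unless the metric is stated.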

Another obstacle is the limited interpretability of model predictions. The so-called ‘black box’ nature of deep learning makes it difficult to trace how a model reaches its decisions, which in turn undermines clinician trust. This challenge is compounded by concerns about false positives and unpredictable model behaviour. To mitigate these risks, explainability techniques such as saliency maps and attention mechanisms are being developed. These tools help clinicians see which image regions influence model outputs, supporting clinical interpretation and building trust. However, full transparency in model decision-making remains an ongoing objective.
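
Saliency maps are often computed from gradients, which requires an automatic-differentiation framework. A closely related, framework-free technique is occlusion sensitivity: blank out one region at a time and record how much the model's score drops. In this sketch, `toy_score` is a hypothetical stand-in for a trained detector that, by construction, only attends to the top-right 2x2 region, so the resulting map should highlight exactly that region.

```python
def occlusion_saliency(image, score_fn, baseline=0.0):
    """Occlusion-sensitivity map: score drop when each pixel is replaced by `baseline`."""
    base_score = score_fn(image)
    saliency = []
    for r in range(len(image)):
        row = []
        for c in range(len(image[0])):
            occluded = [list(line) for line in image]  # copy so the input is untouched
            occluded[r][c] = baseline
            row.append(base_score - score_fn(occluded))
        saliency.append(row)
    return saliency


def toy_score(img):
    """Hypothetical model: mean intensity of the top-right 2x2 region only."""
    return sum(img[r][c] for r in range(2) for c in range(1, 3)) / 4


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
saliency = occlusion_saliency(image, toy_score)
# → [[0.0, 0.5, 0.75], [0.0, 1.25, 1.5], [0.0, 0.0, 0.0]]
```

Pixels outside the region the model uses receive zero saliency, which is the kind of check a clinician could use to confirm that a model is attending to the lesion rather than to an unrelated structure. Real implementations usually occlude patches rather than single pixels to keep the cost manageable.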

Expanding the Diagnostic Framework

Improving detection models further will require expanding both the scope of input data and the underlying model architecture. Emerging research suggests that combining deep learning with other diagnostic modalities could enhance performance. For example, integrating CT imaging with data from electronic health records enables models to detect patterns in symptom evolution, laboratory values or treatment history that may precede anatomical signs. Similarly, systemic imaging biomarkers, such as changes in body composition or signs of sarcopenia, can serve as early indicators of disease and enhance risk stratification.

Liquid biopsy offers another complementary avenue. While circulating tumour DNA and protein markers show potential for early diagnosis, they currently lack sensitivity when used alone, particularly in early-stage disease. However, their integration with imaging features within multimodal AI systems may compensate for these limitations, enabling earlier identification of malignancies. Combined approaches can interpret subtle molecular changes alongside anatomical features, potentially raising diagnostic confidence. The same principle applies to the integration of genomic data, where comprehensive AI frameworks might identify relevant mutations and correlate them with radiological findings.
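
One simple way a multimodal system might combine an imaging model with a liquid-biopsy assay is late fusion: each modality produces its own probability of malignancy, and the two are merged. The sketch below averages them in log-odds space; the function names and the 0.6/0.4 weights are hypothetical placeholders that would in practice be fitted on validation data, and many published multimodal systems instead fuse learned features earlier in the network.

```python
import math


def logit(p):
    """Convert a probability (strictly between 0 and 1) to log-odds."""
    return math.log(p / (1 - p))


def late_fusion(p_imaging, p_biomarker, w_imaging=0.6, w_biomarker=0.4):
    """Combine two model probabilities by weighted averaging of their log-odds.

    Weights are illustrative assumptions; inputs of exactly 0 or 1 are not supported.
    """
    z = w_imaging * logit(p_imaging) + w_biomarker * logit(p_biomarker)
    return 1 / (1 + math.exp(-z))


# A moderately suspicious CT finding combined with a weakly positive blood test
fused = late_fusion(0.8, 0.4)
```

With weights summing to one, the fused probability always lies between the two inputs; a learned fusion model could also weight agreement between modalities more heavily, which is where the potential gain in diagnostic confidence comes from.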

Advances in imaging hardware, such as photon-counting CT, offer higher spatial resolution and improved contrast, which could enhance the quality of training data for deep learning. Meanwhile, federated learning approaches could enable institutions to collaboratively build models without sharing patient data, preserving privacy while diversifying training sets. This could be particularly impactful in rare or complex cases, where data volume at a single institution may be insufficient for robust training. Collectively, these strategies point towards a future where multimodal, interpretable AI systems support radiologists in achieving earlier and more accurate pancreatic cancer diagnoses.
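
Federated learning is commonly implemented with federated averaging (FedAvg): each institution trains a copy of the model on its own scans and sends only the updated parameters to a coordinator, which averages them weighted by local dataset size. This stripped-down sketch of the aggregation step represents parameters as plain lists; the site values and case counts are hypothetical.

```python
def federated_average(site_weights, site_sizes):
    """FedAvg-style aggregation: average each parameter, weighted by local dataset size.

    Only parameter vectors are shared; patient images never leave each site.
    """
    total = sum(site_sizes)
    n_params = len(site_weights[0])
    return [
        sum(w[i] * n for w, n in zip(site_weights, site_sizes)) / total
        for i in range(n_params)
    ]


# Hypothetical: site A trained on 100 scans, site B on 300
global_params = federated_average([[1.0, 2.0], [3.0, 4.0]], [100, 300])
# → [2.5, 3.5]
```

Weighting by dataset size lets larger sites contribute proportionally, while a small centre holding rare or complex cases still influences the shared model without releasing its data.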

Deep learning is reshaping the landscape of pancreatic cancer detection, offering tools that can enhance diagnostic accuracy and identify subtle lesions in CT imaging. Models trained to detect early-stage tumours show encouraging results, particularly in their ability to process complex patterns and deliver precise lesion localisation. Yet, widespread clinical application remains constrained by challenges in model generalisability, reproducibility and transparency. To fully realise the potential of these technologies, continued validation, standardisation and integration into radiology workflows are essential.

Future directions include building comprehensive, multimodal AI systems that incorporate imaging, clinical records, molecular data and systemic biomarkers. With more inclusive datasets and advanced imaging techniques, such models could play a pivotal role in addressing one of oncology’s most urgent diagnostic challenges. The next step lies in translating technical innovation into clinically actionable tools that can improve survival outcomes through earlier, more reliable detection of pancreatic cancer.

Source: Radiology Advances

Image Credit: iStock