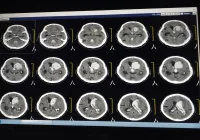

The increasing workload of radiologists has significantly contributed to a rise in reporting errors, posing risks to patient care and diagnostic accuracy. Generating a radiology report involves two stages: detecting abnormalities in images and documenting the findings accurately. Errors can occur at both stages, with some resulting from misinterpretations and others stemming from inconsistencies or factual inaccuracies in report documentation. The growing reliance on artificial intelligence in medical applications has led to the exploration of large language models (LLMs) as potential proofreading tools. OpenAI’s GPT-4 has demonstrated promise in identifying and revising errors in radiology reports, offering a possible solution to improve accuracy and efficiency. A recent study published in Radiology evaluates the feasibility of GPT-4 in proofreading head CT reports by assessing its error detection, reasoning and revision capabilities in comparison with human readers.

Evaluating GPT-4’s Proofreading Performance

The study assessed GPT-4’s performance using a dataset of 10,300 head CT reports from the MIMIC-III database. Two experiments were conducted: the first involved a subset of 600 reports, half of which contained intentional errors, to optimise GPT-4’s settings and evaluate its error detection accuracy. The second experiment tested GPT-4 on 10,000 reports deemed error-free by radiologists to analyse its false-positive rate. GPT-4 demonstrated a strong ability to detect factual inconsistencies, with an 89% sensitivity rate for factual errors and an 84% sensitivity rate for interpretive errors. This suggests that the model was highly effective at identifying discrepancies in report content, such as misreported lesion locations or incorrect numerical measurements. Additionally, GPT-4 performed error reasoning and revisions with high quality, receiving strong ratings for both tasks.

While the model’s proofreading capability was notable, it was not flawless. GPT-4 exhibited limitations in distinguishing clinically significant findings from minor details, leading to frequent false positives. When applied to the error-free dataset, it incorrectly identified errors in 1.79% of reports, with a low positive predictive value of 0.05. However, 14% of these false-positive results led to beneficial revisions, such as improved report clarity, grammatical corrections and the addition of missing but clinically relevant details. The study’s findings highlight that GPT-4, despite its limitations, can serve as a valuable proofreading tool for radiology reports.
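For readers less familiar with the two metrics quoted above, the following minimal sketch shows how sensitivity and positive predictive value are computed from simple counts. The function names and the counts are hypothetical, chosen only so that the arithmetic lands on figures of the same size as those reported; they are not the study's underlying data.

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Share of reports containing a real error that the proofreader flagged."""
    return true_positives / (true_positives + false_negatives)


def positive_predictive_value(true_positives: int, false_positives: int) -> float:
    """Share of flagged reports that actually contained an error."""
    return true_positives / (true_positives + false_positives)


# Hypothetical counts for illustration only (not taken from the study).
example_sensitivity = sensitivity(true_positives=89, false_negatives=11)
example_ppv = positive_predictive_value(true_positives=5, false_positives=95)

print(f"sensitivity = {example_sensitivity:.2f}")
print(f"PPV = {example_ppv:.2f}")
```

The two measures answer different questions: sensitivity describes how many real errors are caught, while positive predictive value describes how often a flag turns out to be a real error. A proofreader can therefore score well on the first and poorly on the second when real errors are rare.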

Comparison with Human Readers

To assess GPT-4’s performance against human readers, the study included a comparative evaluation involving radiologists and neurologists. The human readers reviewed a subset of 400 reports, including both unaltered and error-applied versions. The results indicated that while human readers had a stronger ability to interpret findings in context, they exhibited lower sensitivity in detecting factual inconsistencies. The sensitivity of human readers for factual errors ranged from 0.33 to 0.69, significantly lower than GPT-4’s 0.89. However, for interpretive errors, human performance was comparable to GPT-4, with some radiologists demonstrating superior detection capabilities.

Another key finding was the significant difference in proofreading speed. GPT-4 completed each proofreading task in an average of 15.5 seconds, whereas human readers took between 82 and 121 seconds per report. This efficiency advantage suggests that GPT-4 could alleviate some of the workload associated with radiology reporting, particularly in detecting factual errors that might otherwise be overlooked. Nonetheless, the model’s tendency to overreport findings underscores the need for human oversight. GPT-4 produced false positives more often than human readers did, and these often involved minor or clinically insignificant details. While some of these false-positive revisions were beneficial, others added unnecessary information to reports.
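The size of the speed gap can be worked out directly from the per-report times quoted above. The sketch below is a rough back-of-the-envelope calculation; the variable and function names are illustrative, and it assumes the quoted times are representative averages and ignores any time a radiologist would spend reviewing GPT-4's suggestions.

```python
# Per-report proofreading times as quoted in the article, in seconds.
GPT4_SECONDS_PER_REPORT = 15.5
HUMAN_SECONDS_FASTEST = 82.0
HUMAN_SECONDS_SLOWEST = 121.0


def speedup(human_seconds: float, model_seconds: float) -> float:
    """How many times faster the model is than a human reader."""
    return human_seconds / model_seconds


speedup_low = speedup(HUMAN_SECONDS_FASTEST, GPT4_SECONDS_PER_REPORT)
speedup_high = speedup(HUMAN_SECONDS_SLOWEST, GPT4_SECONDS_PER_REPORT)

print(f"GPT-4 is roughly {speedup_low:.1f}x to {speedup_high:.1f}x faster per report")
```

On these figures, GPT-4 proofreads a report roughly five to eight times faster than the human readers in the study.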

Challenges and Future Implications

Despite its strengths, GPT-4 faces several challenges in practical clinical application. One of the primary concerns is its difficulty in prioritising the clinical significance of findings. Radiologists not only ensure factual consistency but also make critical judgments about which findings are most relevant to patient care. GPT-4, while effective at identifying inconsistencies, lacks the ability to assess the relative importance of different observations. As a result, its sensitivity tends to decline when reports contain multiple impressions, as it struggles to organise findings coherently.

The study also highlights the model’s high false-positive rate, which could impact its integration into radiology workflows. While GPT-4’s revisions sometimes improved report clarity, excessive false-positive detections could introduce unnecessary edits, potentially leading to inefficiencies rather than improvements. Addressing this limitation may require refining GPT-4’s prompt design or fine-tuning the model using domain-specific training datasets. Future research should explore methods to reduce false positives while maintaining the model’s high sensitivity to factual errors. Additionally, integrating AI proofreading tools with structured report generation systems could enhance workflow efficiency while mitigating GPT-4’s weaknesses.

GPT-4 demonstrates considerable potential as a proofreading tool for head CT reports, particularly in detecting factual errors and enhancing reporting efficiency. Compared with human readers, it exhibited significantly higher sensitivity in factual error detection while completing tasks at a much faster rate. However, its inability to prioritise clinically significant findings and its tendency to generate false positives highlight the need for human oversight. While GPT-4 is not a replacement for radiologists, it can serve as a valuable adjunct tool, assisting in error detection and reducing the cognitive burden of proofreading. With further refinement, AI-driven proofreading could become an integral component of radiology workflows, contributing to improved accuracy and efficiency in medical reporting.

Source: Radiology

Image Credit: iStock