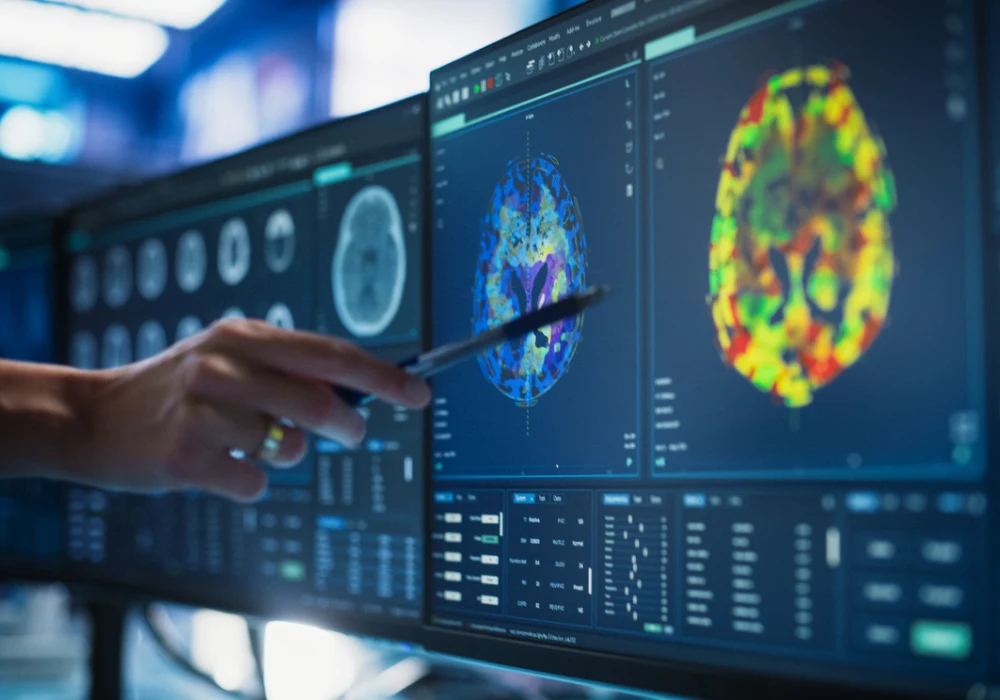

Generative artificial intelligence has become a major force in medical imaging, with capabilities extending far beyond traditional diagnostic support. By creating synthetic datasets that mirror real-world medical images, these models offer new ways to expand research resources, enhance clinical training and facilitate collaboration while preserving patient privacy. Their impact is particularly visible in radiology, where innovations in synthetic imaging are influencing both research and practice. Yet, alongside this promise are questions of quality, interpretability, fairness and ethics that must be addressed to ensure their safe and effective adoption in healthcare.

Building Synthetic Datasets

Synthetic data in medical imaging refers to datasets created through generative models rather than collected directly from patients. These datasets are designed to replicate the statistical distribution of real images while protecting sensitive information. Generative models achieve this by learning patterns from real data and then producing synthetic outputs that closely resemble them.
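To make "replicating the statistical distribution" concrete, here is a deliberately toy sketch. It assumes tiny grayscale images stored as flat lists of intensities, fits an independent Gaussian to every pixel position and samples new images from those Gaussians. Real generative models learn far richer, correlated structure. The function names are hypothetical and serve only as illustration.

```python
import random
import statistics

def fit_pixel_gaussians(images):
    """Estimate (mean, population std) for every pixel position.

    `images` is a list of equal-length lists of floats (flattened images).
    This toy model ignores correlations between pixels.
    """
    columns = list(zip(*images))  # values of each pixel across all images
    return [(statistics.fmean(col), statistics.pstdev(col)) for col in columns]

def sample_synthetic(params, n, seed=0):
    """Draw `n` synthetic images, sampling each pixel independently."""
    rng = random.Random(seed)
    return [[rng.gauss(mu, sigma) for mu, sigma in params] for _ in range(n)]

# Hypothetical "real" data: three 3-pixel images.
real = [[0.1, 0.8, 0.5], [0.2, 0.9, 0.4], [0.0, 0.7, 0.6]]
params = fit_pixel_gaussians(real)
synthetic = sample_synthetic(params, n=5)
```

None of the synthetic images is a copy of a real one. Across many samples, however, their per-pixel statistics match the training set. That is the property, in far more sophisticated form, that makes synthetic datasets useful.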

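Two major categories of generative models dominate this field: physics-informed and statistical. Physics-informed models embed domain-specific principles, such as tissue biomechanics or radiation physics, to simulate realistic biological processes. Statistical models, including variational autoencoders, generative adversarial networks and diffusion models, learn directly from patterns in the data to generate new images. These statistical families trade off sampling speed, sample quality and sample diversity differently. For example, generative adversarial networks sample quickly but tend to produce less diverse outputs, while diffusion models offer high quality and diversity but sample slowly. This tension is often described as a “trilemma” that researchers must weigh when choosing the most suitable model.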
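Diffusion models are the least intuitive of the statistical families. In the widely used DDPM formulation, training starts by gradually corrupting images with Gaussian noise, and a network then learns to reverse that corruption. The sketch below shows only the forward (noising) step. It assumes the linear variance schedule from 1e-4 to 0.02 over 1,000 steps that is common in that formulation, and pixel values scaled to roughly [-1, 1]. The trained denoiser, which does the actual generating, is omitted, and the function names are illustrative.

```python
import math
import random

def linear_alpha_bars(steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    alpha_bars = []
    running = 1.0
    for t in range(steps):
        beta = beta_start + (beta_end - beta_start) * t / (steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def noisy_sample(x0, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    a_bar = alpha_bars[t]
    return [math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * rng.gauss(0.0, 1.0) for x in x0]

alpha_bars = linear_alpha_bars()
rng = random.Random(0)
image = [0.5, -0.2, 0.9, 0.0]  # a tiny flattened "image" scaled to about [-1, 1]
slightly_noisy = noisy_sample(image, 10, alpha_bars, rng)      # still close to the original
almost_pure_noise = noisy_sample(image, 999, alpha_bars, rng)  # original signal nearly erased
```

Generation runs this process in reverse. Starting from pure noise, a learned denoiser removes a little noise at each of many steps, and those many steps are why diffusion models sit on the slow corner of the trilemma.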


Generative models are used not only to create training data for classification and segmentation models but also to transform existing images. For example, they can denoise low-dose CT scans, shorten MRI acquisition times or generate missing MRI sequences. More advanced techniques enable inpainting, selectively adding or removing features in an image, which is particularly useful for enriching datasets with rare pathologies. Such applications underline the potential of synthetic data as both a supplement to and, in some cases, a substitute for real imaging data.
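At its core, inpainting is a masked composite. Pixels outside a mask are kept from the original image, while pixels inside it come from the generative model. The sketch below shows only that compositing step. It assumes images are flat lists and the mask is binary (1 marks the region to regenerate). `generate` and `mean_fill` are hypothetical stand-ins for a trained model, and some diffusion-based inpainting methods repeat a blend like this at every denoising step rather than once.

```python
from typing import Callable, List

def inpaint(image: List[float], mask: List[int],
            generate: Callable[[List[float], List[int]], List[float]]) -> List[float]:
    """Replace masked pixels (mask == 1) with model output and keep all other pixels unchanged."""
    if len(image) != len(mask):
        raise ValueError("image and mask must have the same length")
    proposal = generate(image, mask)
    return [g if m else x for x, m, g in zip(image, mask, proposal)]

def mean_fill(image: List[float], mask: List[int]) -> List[float]:
    """Hypothetical stand-in 'model': proposes the mean of the unmasked pixels everywhere."""
    known = [x for x, m in zip(image, mask) if not m]
    fill = sum(known) / len(known)
    return [fill] * len(image)

scan = [0.2, 0.4, 0.9, 0.6]
lesion_mask = [0, 0, 1, 0]  # remove the bright feature at index 2
result = inpaint(scan, lesion_mask, mean_fill)
```

Adding a rare pathology works the same way. The mask marks where the feature should appear, and the model is asked to synthesise it there, leaving the surrounding anatomy untouched.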

Expanding Applications in Healthcare

The use cases of synthetic imaging are growing rapidly. One clear benefit is the ability to expand datasets for training artificial intelligence algorithms. Classifiers trained on a mix of real and synthetic data often perform better than those trained on real data alone. In some cases, models trained on synthetic data can even match the performance of models trained on real data, reducing dependence on scarce or sensitive patient data.

Synthetic images can also improve fairness in AI systems. By generating additional examples from underrepresented populations or rare conditions, they can reduce disparities in model accuracy across demographic groups. Synthetic data is also being used to simulate counterfactuals, enabling researchers to stress-test models under different conditions, such as altered acquisition parameters or the removal of confounding features.
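A simple way to use generation for rebalancing is to decide, for each subgroup, how many synthetic examples it needs to reach the size of the largest group. The sketch below computes only those quotas. It assumes a group label is available for every real image. Matching the largest group is just one illustrative policy, and the function name is hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable

def synthetic_quotas(group_labels: Iterable[str]) -> Dict[str, int]:
    """Number of synthetic images each group needs to match the largest group."""
    counts = Counter(group_labels)
    if not counts:
        return {}
    target = max(counts.values())
    return {group: target - n for group, n in counts.items()}

labels = ["A"] * 100 + ["B"] * 20 + ["C"] * 5
quotas = synthetic_quotas(labels)  # {'A': 0, 'B': 80, 'C': 95}
```

In practice, the quotas would drive a conditional generator asked for images of each subgroup. Per-group accuracy should then be re-measured on real held-out data, since synthetic samples can carry their own biases.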

Another promising application lies in modelling biological processes. Generative models trained on pre- and postoperative radiographs, for instance, can simulate surgical outcomes with remarkable accuracy. They can also model disease progression, such as tumour growth in MRI scans, offering potential value in prognosis and treatment planning. Beyond research, these models can serve as educational resources, giving trainees access to diverse, realistic cases without exposing patient information.

Challenges and Future Directions

Despite these opportunities, generative artificial intelligence in medical imaging raises significant challenges. Patient privacy remains a central concern, as models can unintentionally replicate images from their training data, risking reidentification. New privacy metrics and anonymisation techniques are being developed, along with content provenance standards to mark synthetic outputs.
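One basic check for the replication risk described above is a nearest-neighbour test. For each synthetic image, it measures the distance to the closest training image and flags any that are suspiciously close. The sketch below assumes images are flat lists of equal length and uses plain Euclidean distance with a hypothetical threshold. Practical audits typically compare images in a learned feature space and calibrate the threshold against distances between real images.

```python
import math
from typing import List, Sequence, Tuple

def nearest_distance(sample: Sequence[float], training: List[Sequence[float]]) -> float:
    """Euclidean distance from `sample` to its closest training image."""
    return min(math.dist(sample, t) for t in training)

def flag_memorised(synthetic: List[Sequence[float]],
                   training: List[Sequence[float]],
                   threshold: float = 1e-3) -> List[Tuple[int, float]]:
    """Return (index, distance) for synthetic images closer than `threshold` to any training image."""
    flagged = []
    for i, s in enumerate(synthetic):
        d = nearest_distance(s, training)
        if d < threshold:
            flagged.append((i, d))
    return flagged

training = [[0.1, 0.2, 0.3], [0.9, 0.8, 0.7]]
synthetic = [[0.5, 0.5, 0.5], [0.1, 0.2, 0.3]]  # the second one copies a training image
flags = flag_memorised(synthetic, training)      # [(1, 0.0)]
```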

Another difficulty lies in the opacity of these models. Their complex mechanisms make it hard to understand how outputs are generated, limiting trust among clinicians. Efforts are underway to improve interpretability through uncertainty measures and interactive visualisation tools. Bias is another pressing issue: if training datasets underrepresent certain groups, generative models risk amplifying these inequalities. Strategies such as diversity-aware sampling and fairness constraints are needed to mitigate this risk.
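One simple form of uncertainty measure for a generative model is to draw several outputs for the same input and see where they disagree. Pixels with a high spread are ones the model is unsure about, and they can be shown to a clinician as a map. The sketch below assumes the samples have already been generated and are flat lists of equal length. The function name is hypothetical.

```python
import statistics
from typing import List

def uncertainty_map(samples: List[List[float]]) -> List[float]:
    """Per-pixel sample standard deviation across repeated generations of the same case."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to estimate spread")
    return [statistics.stdev(pixel_values) for pixel_values in zip(*samples)]

samples = [
    [0.50, 0.10, 0.90],
    [0.50, 0.30, 0.90],
    [0.50, 0.20, 0.90],
]
umap = uncertainty_map(samples)  # pixels 0 and 2 agree exactly; pixel 1 varies
```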

Future directions point towards standardised evaluation frameworks tailored to healthcare, ensuring that synthetic data is both clinically relevant and technically sound. Hybrid models combining physics-informed and statistical methods may improve accuracy and reliability. Regulatory frameworks are also evolving. The US Food and Drug Administration has already cleared some synthetic imaging technologies, requiring rigorous validation to demonstrate performance equivalent to that of conventional imaging. Such regulatory precedents suggest a structured path for broader clinical adoption.

Generative artificial intelligence is reshaping the field of medical imaging by producing synthetic data that can expand research, improve fairness and model complex biological processes while protecting privacy. Its applications range from enhancing datasets and accelerating imaging workflows to supporting education and prognosis. However, its safe integration into healthcare depends on addressing privacy risks, improving transparency and mitigating bias. With ongoing research, evolving regulation and careful implementation, generative artificial intelligence holds the potential to transform imaging practice and patient care.

Source: The Lancet Digital Health

Image Credit: iStock