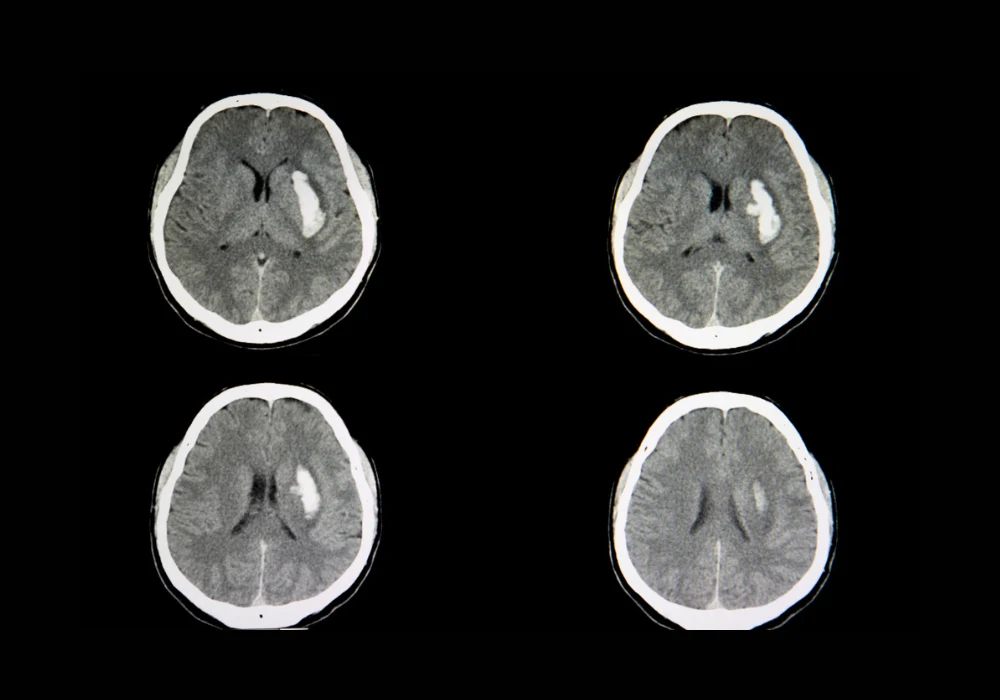

Artificial intelligence has become an integral part of radiology, particularly in high-demand settings such as emergency and teleradiology services. Non-contrast head CT is central to evaluating acute emergencies, including intracranial haemorrhage. This condition carries a 30-day mortality rate of 44%, and only 20% of patients recover by six months. These figures underscore the need for rapid and accurate diagnosis. However, heavy off-hour workloads raise the risk of diagnostic error, and radiologists increasingly rely on AI-based detection systems for support. Although such tools show high sensitivity and specificity, their performance is not fixed. It may drift over time as clinical practice, patient demographics or imaging equipment change, so continuous monitoring is essential. A recent evaluation of a commercial AI system for haemorrhage detection, monitored with the help of a large language model, shows how algorithmic detection can be paired with scalable monitoring to improve clinical reliability.

Assessing the Role of Language Models

The multi-centre evaluation analysed 332,809 head CT scans from 37 radiology practices across the United States between December 2023 and May 2024. Of these, 13,569 were flagged as positive for haemorrhage by the Aidoc AI system. To establish ground truth and validate performance, experienced radiologists who were blinded to the AI outputs reviewed 200 randomly selected positive cases. ChatGPT-4 Turbo, deployed on a HIPAA-compliant platform, was used to extract haemorrhage-related findings from the Impression sections of the radiology reports. The prompt was refined through testing on more than 1,000 reports to maximise classification accuracy.
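The extraction step can be pictured as a small pipeline: isolate the Impression section, wrap it in a fixed instruction, and map the model's reply to a binary label. The Python sketch below assumes that reports mark sections with capitalised headers such as `IMPRESSION:`. The header pattern, the prompt wording and the function names (`extract_impression`, `build_prompt`, `parse_label`) are hypothetical and are not the study's actual prompt. The call to the language model itself is deliberately left out.

```python
import re

# Assumed convention (hypothetical): section headers are capitalised words
# followed by a colon at the start of a line, e.g. "FINDINGS:" or "IMPRESSION:".
HEADER_RE = re.compile(r"^\s*([A-Z][A-Z /]+):\s*(.*)$")

# Hypothetical prompt wording, not the prompt used in the study.
PROMPT_TEMPLATE = (
    "You will read the Impression section of a non-contrast head CT report.\n"
    "Answer with one word, POSITIVE or NEGATIVE: does the text describe "
    "an acute intracranial haemorrhage?\n\n"
    "Impression:\n{impression}"
)


def extract_impression(report: str) -> str:
    """Return the IMPRESSION section as one string, or "" if it is absent."""
    collected: list[str] = []
    in_impression = False
    for line in report.splitlines():
        header = HEADER_RE.match(line)
        if header:
            # Each header opens a new section; keep text only under IMPRESSION.
            in_impression = header.group(1).strip() == "IMPRESSION"
            if in_impression and header.group(2).strip():
                collected.append(header.group(2).strip())
        elif in_impression and line.strip():
            collected.append(line.strip())
    return " ".join(collected)


def build_prompt(report: str) -> str:
    """Wrap the extracted Impression text in the fixed instruction."""
    return PROMPT_TEMPLATE.format(impression=extract_impression(report))


def parse_label(reply: str) -> bool:
    """Map a one-word model reply to True (haemorrhage) or False; reject anything else."""
    word = reply.strip().strip(".").upper()
    if word not in {"POSITIVE", "NEGATIVE"}:
        raise ValueError(f"unexpected model reply: {reply!r}")
    return word == "POSITIVE"
```

Restricting the input to the Impression section keeps prompts short and consistent. The trade-off is that any finding mentioned only elsewhere in the report is invisible to the classifier.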


When assessed against radiologist-confirmed cases, ChatGPT achieved a positive predictive value of 1 and a negative predictive value of 0.988, with an overall accuracy of 0.995 and an area under the curve of 0.996. Only one false negative was identified. In that report, the relevant finding was described outside the Impression section. No false positives occurred. These results suggest that a large language model can reliably extract structured data from free-text reports without extensive human annotation. This substantially reduces the resources required for post-deployment monitoring and makes the approach a scalable tool for continuous oversight of deployed AI systems.
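The reported metrics follow from the standard confusion-matrix definitions. In the sketch below, the counts are illustrative and are not the study's published confusion matrix. They describe a 200-case split with one false negative and no false positives, chosen because it reproduces the rounded values reported. The `binary_auc` property assumes the classifier outputs only a yes/no label. In that case, the ROC curve has a single operating point and its area equals (sensitivity + specificity) / 2. How the study itself computed its AUC is an open assumption here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Counts of LLM report labels compared with radiologist ground truth."""
    tp: int  # LLM positive, ground truth positive
    fp: int  # LLM positive, ground truth negative
    tn: int  # LLM negative, ground truth negative
    fn: int  # LLM negative, ground truth positive

    @property
    def ppv(self) -> float:
        return self.tp / (self.tp + self.fp)

    @property
    def npv(self) -> float:
        return self.tn / (self.tn + self.fn)

    @property
    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    @property
    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fp + self.tn + self.fn)

    @property
    def binary_auc(self) -> float:
        # Single-threshold ROC: area under (0,0)-(1-spec, sens)-(1,1) is (sens + spec) / 2.
        return (self.sensitivity + self.specificity) / 2


# Illustrative counts only, not the study's published confusion matrix.
m = Confusion(tp=117, fp=0, tn=82, fn=1)
print(round(m.ppv, 3), round(m.npv, 3), round(m.accuracy, 3), round(m.binary_auc, 3))
# prints: 1.0 0.988 0.995 0.996
```

With no false positives, PPV is 1 regardless of the other counts. A single false negative is enough to pull NPV noticeably below 1, because negatives are the smaller group in this illustrative split.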

Variability in AI System Performance

Aidoc showed strong diagnostic accuracy but generated false positives associated with several clinical and technical factors. Statistical analysis showed that scanner manufacturer was a major factor. In multivariate analysis, Philips scanners were associated with a significantly higher false positive rate than GE Medical Systems scanners, with an odds ratio of 6.97. Imaging artefacts also contributed (odds ratio 3.79), as did the presence of neurological symptoms (odds ratio 2.64). In contrast, midline shift and mass effect were associated with fewer false positives, suggesting that larger or more conspicuous haemorrhages are detected more reliably.
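An odds ratio multiplies the odds of an outcome, not its probability, so its effect on the false positive rate depends on the baseline rate. Under a logistic model, an adjusted odds ratio describes this change with the other covariates held fixed. The sketch below converts a baseline probability and an odds ratio into the implied probability for the exposed group. The 5% baseline false positive rate in the example is a hypothetical value, because the article does not report one.

```python
def prob_from_odds_ratio(baseline_prob: float, odds_ratio: float) -> float:
    """Probability implied by applying an odds ratio to a baseline probability."""
    if not 0.0 < baseline_prob < 1.0:
        raise ValueError("baseline_prob must lie strictly between 0 and 1")
    baseline_odds = baseline_prob / (1.0 - baseline_prob)  # p / (1 - p)
    exposed_odds = baseline_odds * odds_ratio              # the OR scales the odds
    return exposed_odds / (1.0 + exposed_odds)             # convert odds back to p


# Hypothetical 5% baseline false positive rate; the article reports none.
print(round(prob_from_odds_ratio(0.05, 6.97), 3))  # prints 0.268
```

With that hypothetical baseline, an odds ratio of 6.97 implies a false positive probability of roughly 27%. Multiplying the probability directly would give about 35%, which overstates the effect.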

Radiologists using Aidoc achieved a sensitivity of 0.936 and a specificity of 1, with an accuracy of 0.96 and an area under the curve of 0.98. Discrepancies were nonetheless noted. Seven haemorrhages were missed by radiologists but correctly identified by the AI system, most of them during night shifts in emergency departments. This likely reflects the effect of workload on diagnostic accuracy and shows where AI assistance can reduce error. At the same time, the system produced 71 false positives that radiologists correctly reported as negative. In one case, a radiologist showed automation bias by deferring to an incorrect AI-positive result. These findings point to both the benefits and the safety concerns of integrating AI into clinical workflows.
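Discrepancies of this kind come from comparing three labels per case: the AI flag, the radiologist's report and the expert ground truth. A minimal Python sketch of that cross-tabulation follows. The category names and the toy cases are hypothetical. An incorrect AI-positive flag that the radiologist also reported as positive is labelled only as possible automation bias, because attributing it to bias requires reviewing the case.

```python
from collections import Counter
from collections.abc import Iterable

# Key: (ai_positive, radiologist_positive, ground_truth_positive).
# Category names are hypothetical labels for illustration.
CATEGORY = {
    (True, True, True): "agree: true positive",
    (True, False, True): "AI caught radiologist miss",
    (True, True, False): "possible automation bias",
    (True, False, False): "AI false positive rejected",
    (False, True, True): "radiologist caught AI miss",
    (False, False, True): "missed by both",
    (False, True, False): "radiologist false positive",
    (False, False, False): "agree: true negative",
}


def tally(cases: Iterable[tuple[bool, bool, bool]]) -> Counter:
    """Count cases per category; each case is an (ai, radiologist, truth) triple."""
    return Counter(CATEGORY[(bool(ai), bool(rad), bool(truth))] for ai, rad, truth in cases)


# Toy cases, not study data.
toy = [(True, False, True), (True, False, False), (True, True, False), (True, False, False)]
for category, count in sorted(tally(toy).items()):
    print(f"{category}: {count}")
```

Keeping all eight combinations explicit makes it easy to see which cells a given study design can populate. For example, a sample drawn only from AI-flagged cases cannot contain the four AI-negative categories.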

Clinical Implications and Safety Considerations

The evaluation underscores the importance of continuous monitoring to detect performance drift and safeguard patient care. Using a large language model to track outputs across large datasets makes it possible to detect variations in accuracy associated with scanner type, artefacts or clinical presentation. Such monitoring gives healthcare providers a practical way to check whether AI tools remain reliable after deployment.
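One way to operationalise this monitoring is to treat the LLM-extracted report label as the reference for each AI-flagged study. The share of flags that reports confirm, a proxy for positive predictive value, can then be tracked per month and per scanner manufacturer. The sketch below assumes each flagged study carries a month and a manufacturer field. The record layout, function names, vendor names and alert threshold are hypothetical and do not describe the study's pipeline.

```python
from collections import defaultdict
from collections.abc import Iterable
from typing import NamedTuple

Key = tuple[str, str]  # (month, manufacturer)


class FlaggedStudy(NamedTuple):
    """One AI-flagged study (hypothetical record layout)."""
    month: str             # reporting month, e.g. "2024-01"
    manufacturer: str      # scanner vendor, assumed available from image metadata
    report_positive: bool  # LLM-extracted label: does the report confirm haemorrhage?


def proxy_ppv(studies: Iterable[FlaggedStudy]) -> dict[Key, float]:
    """Share of AI flags confirmed by the report, per (month, manufacturer)."""
    confirmed: dict[Key, int] = defaultdict(int)
    flagged: dict[Key, int] = defaultdict(int)
    for study in studies:
        key = (study.month, study.manufacturer)
        flagged[key] += 1
        confirmed[key] += int(study.report_positive)
    return {key: confirmed[key] / flagged[key] for key in flagged}


def drift_alerts(ppv: dict[Key, float], threshold: float) -> list[Key]:
    """Groups whose proxy PPV falls below the (hypothetical) alert threshold."""
    return sorted(key for key, value in ppv.items() if value < threshold)


studies = [
    FlaggedStudy("2024-01", "VendorA", True),
    FlaggedStudy("2024-01", "VendorA", True),
    FlaggedStudy("2024-01", "VendorB", True),
    FlaggedStudy("2024-01", "VendorB", False),
]
print(drift_alerts(proxy_ppv(studies), threshold=0.8))  # prints [('2024-01', 'VendorB')]
```

Because the reference is the radiologist's report rather than expert review, this proxy inherits reporting errors such as automation bias. Groups with few studies also give noisy estimates, so a practical deployment would likely require a minimum group size before raising alerts.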

At the clinical level, combining AI outputs with radiologist expertise improved detection rates and reduced the likelihood of missed haemorrhages during high-volume or off-hour workloads. Yet the risks of over-reliance were also evident. Although automation bias was observed in only one case, it illustrates the need for safeguards such as confidence scores and explanatory overlays, so that radiologists remain the final arbiters of diagnosis. The results also highlight variability between imaging systems, reinforcing the need to account for vendor differences and technical parameters when implementing AI.

The evaluation also points to the need for data privacy safeguards when deploying large language models in healthcare. Given the sensitivity of medical information, HIPAA compliance and robust anonymisation are essential for secure and responsible integration.

The evaluation demonstrates that large language models can provide an effective framework for monitoring AI tools after clinical deployment. ChatGPT-4 Turbo achieved high accuracy in extracting haemorrhage-related findings from radiology reports, offering a scalable and resource-efficient approach to performance oversight. While the Aidoc system showed strong results in haemorrhage detection, variability linked to scanner type, artefacts and clinical context highlights the importance of ongoing monitoring and refinement. Combining AI systems with expert interpretation can improve diagnostic accuracy and support timely decision-making, particularly in emergency settings. However, ensuring safety requires strategies to mitigate over-reliance and safeguard patient data. As AI adoption in radiology expands, robust monitoring frameworks will be essential to maintain clinical effectiveness and patient trust.

Source: Academic Radiology

Image Credit: iStock