HealthManagement, Volume 14 - Issue 2, 2014

Quality is the new frontier in radiology and a necessary step towards improving patients’ outcomes and quality of care. More and more institutions, regions and nations have stated improved quality as their goal and made it part of their mission statement. Quality control must be implemented at every step of the imaging chain, from acquisition to reporting. In this article, we look at two different approaches to quality assurance: first, the evaluation in Turkey of the appropriateness of equipment use and the technical quality of the exams performed; second, radiologists’ reviews in Canada.

The Turkish Approach to Quality: Equipment and Appropriateness

With improving imaging techniques, the number of radiological procedures has increased all over the world. However, accurate medical reporting depends on the correct protocols and on the quality of these examinations. In addition, overuse of x-ray techniques can harm the population. Before using any diagnostic modality, two important questions should be asked: first, “Which modality should we select?” and second, “Which imaging protocol should we use for the suspected disease?” For these reasons, the Turkish Society of Radiology (TSR) and the Health Ministry Department of Health Services have carried out a project with a working team* composed of members from the TSR and the Ministry of Health.

This project covered three main modalities: CT, MRI and mammography. The surveillance project was extended to state hospitals, university hospitals, private hospitals and the outsourced health services that state and university hospitals use for examinations they cannot perform themselves. With regard to the age distribution of machines in Turkey, more than 60% of CT, MR and mammography machines were under five years old and 90% were under 10 years old.

The first step was to prepare a separate electronic evaluation form for each modality. Each form had three parts. The first part evaluated whether the selected modality was indicated for the clinical diagnosis; in general, this part assessed the referring physician’s contribution. The second part examined the radiological technique, protocols and quality. The last part centred on the medical reports. The second and last parts related to the radiographers and radiologists, respectively.

Once the forms were ready, the Department of Health Services of the Health Ministry appointed one coordinator per hospital to select sample radiological examinations from their own centre. Meanwhile, the technical standards for radiological examinations, prepared by the Standards Committee of the Turkish Society of Radiology, were sent to the auditors, who were selected by the Turkish Society of Radiology. There were 153, 127 and 34 auditors for CT, MRI and mammography, respectively. In total, 4536 CT CDs, 4432 MR CDs and 1667 mammography CDs were sent to the auditors by post. Only digital mammograms were evaluated in this project; CR mammograms were excluded. The scores were evaluated using Pearson’s chi-squared test.
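As a hedged illustration of the kind of comparison Pearson’s chi-squared test supports, the sketch below computes the statistic for a table of adequate versus inadequate exams by hospital type. The counts are hypothetical placeholders rather than project data, and the function name is our own; the article does not describe how the test was actually applied.

```python
def pearson_chi_squared(table):
    """Return (statistic, degrees_of_freedom) for a contingency table.

    `table` is a list of rows, each a list of observed counts.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if adequacy were independent of hospital type
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected

    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


# HYPOTHETICAL counts (not from the article), 100 exams per hospital type.
observed = [
    [84, 69, 68],  # adequate: university, private, state
    [16, 31, 32],  # inadequate: university, private, state
]

stat, dof = pearson_chi_squared(observed)
CRITICAL_5_PERCENT_DOF_2 = 5.991  # standard chi-squared critical value
print(f"chi-squared = {stat:.2f} with {dof} degrees of freedom")
print("differs at the 5% level:", stat > CRITICAL_5_PERCENT_DOF_2)
```

A statistic above the critical value would indicate that adequacy rates differ between hospital types by more than chance alone would explain.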

CT Quality Results

3183 of 4536 CT studies from 200 hospitals were scored by 155 radiologists. The distribution of examination areas was 55.5% neuroradiology, 20.7% abdominal radiology, 18.5% thoracic radiology and 5.3% other systems. The indication criteria complied with the standards guidebook in 83.2% of all CT examinations.

In total, 72.4% of exams were adequate. University hospitals had the best results, with a score of 84.3%. Private hospitals and state hospitals scored 69.2% and 67.6%, respectively.

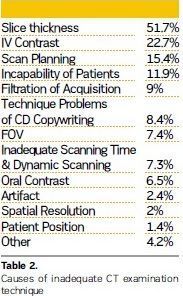

The major cause of inadequate technique was inadequate slice thickness (51.7%). Other causes were use of contrast material (22.7%), scanning plan (15.4%), patient incapability (11.9%), acquisition filtration (9%), technical problems with CD writing (8.4%) and field of view (FOV) (7.4%), followed by inadequate scanning time and dynamic scanning, failure to use oral contrast material, artifact, spatial resolution, patient position, etc.
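A short arithmetic check, offered as a sketch, shows why these percentages should not be read as shares of a single total. It sums the seven quantified causes using the figures reported above; the dictionary labels are our own shorthand.

```python
# Percentages reported in the article for the seven quantified CT causes
ct_causes = {
    "slice thickness": 51.7,
    "contrast material": 22.7,
    "scanning plan": 15.4,
    "incapability of patients": 11.9,
    "filtration of acquisition": 9.0,
    "CD technical problems": 8.4,
    "field of view": 7.4,
}


def total_share(causes):
    """Sum the reported percentages, rounded to one decimal place."""
    return round(sum(causes.values()), 1)


total = total_share(ct_causes)
print(f"Sum of quantified causes: {total}%")
# A total above 100% means some exams were counted under several causes.
print("Causes overlap:", total > 100)
```

The sum exceeds 100%, which suggests that an exam could be marked down for more than one reason.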

MR Quality Results

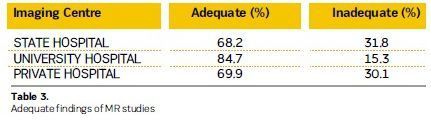

3069 of 4432 MR studies from 193 hospitals were scored by 146 radiologists. The distribution of examination areas was 70.5% for neuroradiology, 21.1% for musculoskeletal radiology, 4.5% for abdominal radiology and 2.9% for other systems. The indication criteria complied with the standards guidebook in 83.9% of all MR examinations. In total, 73% of exams were adequate. University hospitals had the best results, with a score of 84.7%. Private hospitals and state hospitals scored 69.9% and 68.2%, respectively.

The major cause of inadequate technique was missing sequences (44.6%; see Table 4). Other causes included slice thickness (39.1%), FOV (23.2%), image resolution (16.2%), patient incapability (12.4%), scanning plan (10.6%), use of IV contrast agent (7.9%) and use of an inadequate coil (7.4%), followed by lack of advanced MR techniques, dynamic study, inadequate scanning time, artifact, technical problems with CD writing, etc.

Mammography Quality Results

998 of 1667 mammograms from 165 hospitals were scored by 34 radiologists. The indication criteria complied with the standards guidebook in 87.9% of all mammograms. In total, 43.9% of exams were adequate. University hospitals had the best results, with a score of 75.2% (see Table 5). Private hospitals and state hospitals scored 20.9% and 68.2%, respectively.

The major cause of inadequate technique was inadequate patient position (75.2%). Other causes were inadequate contrast density (33.8%), artifacts (28.3%), absence of patient information on the mammogram CD (16.6%), inefficient compression (12.8%), patient incapability (4.9%), technical problems with CD writing (3.3%) and bucky problems (2.4%).
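The share of submitted studies that were actually scored is not stated in the text, but it follows directly from the counts reported for each modality. The sketch below derives it: roughly 70% for CT, 69% for MR and 60% for mammography. The article does not say why the remaining studies went unscored.

```python
# (scored, sent) counts as reported in the article
studies = {
    "CT": (3183, 4536),
    "MR": (3069, 4432),
    "Mammography": (998, 1667),
}


def scored_share(scored, sent):
    """Percentage of submitted studies that were scored, to one decimal place."""
    return round(100 * scored / sent, 1)


for modality, (scored, sent) in studies.items():
    print(f"{modality}: {scored_share(scored, sent)}% of {sent} studies scored")
```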

Quality Improvements: Next Steps

In light of the results of the radiological quality evaluation project in Turkey, the Ministry of Health and TSR have decided to take the following measures in the coming period: 1. The radiological quality evaluation will be repeated once or twice a year; 2. Certification programmes for radiographers will be prepared to address the weak points identified in the results.

These summary results come from the first pilot study. More detailed results will be published in due course.

Working Team Members

TSR members: Dr. Muhtesem Agildere (Professor, Baskent University, Department of Radiology, Ankara), Dr. Selma Uysal Ramadan (Assoc. Professor, Keçiören Training and Research Hospital, Department of Radiology, Ankara), Dr. Levent Araz (Numune Training and Research Hospital, Department of Radiology, Ankara), Dr. Erkin Aribal (Professor, Marmara University, Department of Radiology, Istanbul), Dr. Alper Dilli (Assoc. Professor, Diskapi Yildirim Beyazıt Training and Research Hospital, Ankara), Dr. Levent Altin (Numune Training and Research Hospital, Department of Radiology, Ankara)

Ministry of Health members: Aydin Sari, Merter Bora Erdogdu, Meltem Ercan, Aysun Yildirim

The team coordinators were Dr. Nevra Elmas from TSR and Dr. İrfan Sencan from the Health Ministry of Turkey.

The Canadian Experience: Assessing the Radiologist’s Report

Multiple reviews of radiology reporting have taken place throughout the country, mandated by local health authorities, chiefs of staff or department chairmen. The process culminated in British Columbia (BC) a few years ago, when the CT reporting activity of two radiologists was reviewed, demonstrating high levels of discrepancy, with more than 30% significant errors, which could potentially harm patients.

This series of highly publicised radiologists’ reviews in Canada has raised awareness of the need for a process to evaluate the quality and accuracy of reports in the best interest of our patients and institutions. The American College of Radiology’s RadPeer™ system has been in existence for more than 14 years, but it no longer meets requirements: the retrospective method on which it is based does not protect our community from errors, which may have disastrous consequences. To address this issue, the BC Minister of Health Services requested in February 2011 an independent two-part investigation into the quality of diagnostic imaging, and asked Dr. Doug Cochrane, Provincial Patient Safety and Quality Officer, to lead this review. The first part of the report centred on the credentials and individual experience of the radiologists involved. The second part provided a description of the events, a review of quality assurance and peer review of medical imaging, and a review of physicians’ licensing and credentialing, and issued 35 recommendations (Cochrane 2011). Among the recommendations pertaining to the provision of diagnostic imaging services, six were specific to quality assurance and peer review in diagnostic imaging, as follows:

• That the health authorities and College develop a comprehensive retrospective review process that can be used in the health system or the private sector (recommendation 15);

• That the College and the health authorities develop a standardised retrospective peer review process designed for quality improvement in the health authorities and private facilities (recommendation 16);

• That the Provincial Medical Imaging Advisory Committee (MIAC) establish a provincial management system for diagnostic imaging peer review in BC and that this system oversees both concurrent and comprehensive retrospective peer review processes (recommendation 17);

• That each health authority establish a Diagnostic Imaging Quality Assurance Committee to provide oversight for diagnostic imaging services provided by the health authority (recommendation 18);

• That the British Columbia Radiology Society (BCRS) establish a teaching library of images reflecting difficult interpretations and common errors (recommendation 19);

• That BCRS, the Canadian Association of Radiologists (CAR) and the Royal College establish modality-specific performance benchmarks for diagnostic radiologists that can be used in concurrent peer review monitoring (recommendation 20).

This was the first initiative to implement a province-wide quality assurance programme for diagnostic imaging, focused on the quality of reports. It led to a provincial request for proposal (RFP) for a digital solution to enable the reviews. Three years after the vendor was selected, based on the Cochrane recommendations, only one of the six regions in British Columbia has a programme implemented. The solution implemented is based on the retrospective RadPeer™ system. A second province, Alberta, has also issued an RFP for a region-wide quality assurance solution based on the same requirements as in British Columbia.

There is otherwise no large-scale initiative in Canada, although other jurisdictions are considering whether to engage in such processes. The Canadian Association of Radiologists (CAR) has published a guide to peer review systems, which gives a list of recommendations for successful quality assurance implementation (O’Keeffe et al. 2011). The Ontario Association of Radiologists (OAR) has issued its own recommendations, similar to those of the CAR. Both support not only the current retrospective model, but also a new prospective concept that allows pre-distribution review of diagnostic imaging studies.

At McMaster University, we have initiated a prospective quality assurance pilot, the first in the country. The pilot’s prospective nature allows for completion of QA activities before the report is finalised for the referring physician and made available in the Radiology Information System (RIS) and Picture Archiving and Communication System (PACS). The peer review solution combines the benefits of ‘multi-ology’ diagnostic peer review/quality assurance with the productivity benefits of single or cross-institutional workflow management/workload balancing, faster report turnaround time and improved overall healthcare system capacity into a single integrated platform. It has been developed based on previous large-scale reviews conducted in other provinces.

The pilot started in October 2013 with 11 radiologists participating across four sites, and features 400 CT, 100 MRI and 100 ultrasound cases over a four-month timeframe. Reports made by a radiologist at a given hospital are not sent for review to a radiologist at the same site. For non-urgent elective exams, the maximum turnaround time is 48 hours. In case of discrepancy, the cases are referred to an arbitration committee.
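The routing rules in this paragraph can be sketched in a few lines. This is a minimal illustration, not the pilot’s actual software: the class, the function names, the random choice of reviewer and the reduction of a "discrepancy" to a simple inequality are all assumptions made for the example. Only the cross-site rule, the 48-hour limit and the referral to arbitration come from the text.

```python
import random
from dataclasses import dataclass
from datetime import datetime, timedelta

MAX_TURNAROUND = timedelta(hours=48)  # from the article: non-urgent elective exams


@dataclass(frozen=True)
class Radiologist:
    name: str  # hypothetical identifier
    site: str  # hypothetical site code


def eligible_reviewers(author, radiologists):
    """Reviewers must work at a different site from the report's author."""
    return [r for r in radiologists if r.site != author.site]


def assign_reviewer(author, radiologists, rng=random):
    """Pick a reviewer at random among those at other sites (assumed policy)."""
    candidates = eligible_reviewers(author, radiologists)
    if not candidates:
        raise ValueError("no reviewer available at another site")
    return rng.choice(candidates)


def review_due(submitted_at):
    """Latest time by which the peer review should be completed."""
    return submitted_at + MAX_TURNAROUND


def next_step(author_finding, reviewer_finding):
    """Release the report on agreement; otherwise refer it to arbitration."""
    return "release" if author_finding == reviewer_finding else "arbitration"


staff = [
    Radiologist("R1", "site-A"),
    Radiologist("R2", "site-A"),
    Radiologist("R3", "site-B"),
    Radiologist("R4", "site-C"),
]
reviewer = assign_reviewer(staff[0], staff)
print(reviewer.name, "reviews a report from", staff[0].site)
print("due by", review_due(datetime(2013, 10, 1, 9, 0)))
```

Because the review happens before distribution, the due time bounds how long a report can be held back from the referring physician.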

The goals of this pilot are to:

• Determine the benefits of prospective QA workflows;

• Evaluate automated versus ad hoc QA sampling methodologies;

• Validate the feasibility of automated QA processes;

• Produce a recommendation report;

• Develop a centre of excellence in radiology peer review.

The expected pilot outcomes are:

• Proactive, pre-report distribution peer

- For infants and children, use scales that can be set to provide weights in kilogrammes only, and verify that the scales are clearly labelled as such.

- Whenever possible, use paediatric-specific technologies rather than using adult-oriented technology off-label or employing workarounds.

- If obtaining paediatric-specific technology is not an option, investigate whether an available device can be safely and effectively used on children. Alternatively, ask if the vendor can refer you to current users of the technology who have implemented the system in a manner that addresses the needs of paediatric patients.

- Consider identifying a paediatric technology safety coordinator to assess both the adult-oriented technologies and the adult-paediatric hybrid technologies that are being used on paediatric patients at your facility. Responsibilities may include:

- Identifying devices, accessories, or systems that are appropriate for only a certain range of patients (e.g., adults but not children)

- Identifying devices, accessories, or systems that must be used in a specific configuration to safely accommodate paediatric patients (e.g., restricting the upper flow rate for infusion pumps)

- Where appropriate, clearly labelling any such devices

- Educating staff about unique safety considerations or methods of use that are required when working with paediatric patients

- Establishing protocols for setting medical device alarms to levels that are appropriate for paediatric patients and periodically verifying that these protocols are being followed.
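A coordinator’s inventory check along these lines might be sketched as follows. The data model, the field names and the flow-rate figures are hypothetical placeholders chosen for illustration; they are not clinical values and do not come from the source.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Device:
    name: str
    approved_for_paediatric: bool
    # Upper flow-rate cap (mL/h) that facility protocol requires for
    # paediatric use, if any. HYPOTHETICAL field and units.
    paediatric_max_flow_ml_h: Optional[float] = None
    # Upper flow rate currently configured on the device, if known.
    configured_max_flow_ml_h: Optional[float] = None


def paediatric_issues(device):
    """List the reasons a device is not ready for use on paediatric patients."""
    issues = []
    if not device.approved_for_paediatric:
        issues.append("not appropriate for paediatric patients; label accordingly")
    elif device.paediatric_max_flow_ml_h is not None:
        configured = device.configured_max_flow_ml_h
        if configured is None or configured > device.paediatric_max_flow_ml_h:
            issues.append("upper flow rate is not restricted to the paediatric limit")
    return issues


# HYPOTHETICAL inventory entries; the numbers are placeholders only.
inventory = [
    Device("adult-scale", approved_for_paediatric=False),
    Device("infusion-pump-1", True, paediatric_max_flow_ml_h=100.0,
           configured_max_flow_ml_h=999.0),
    Device("infusion-pump-2", True, paediatric_max_flow_ml_h=100.0,
           configured_max_flow_ml_h=100.0),
]

for device in inventory:
    for issue in paediatric_issues(device):
        print(f"{device.name}: {issue}")
```

Running such a check periodically is one way to verify that configuration protocols are still being followed.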

Data Integrity Failures in EHRs and Other Health IT Systems (no. 4)

Reports illustrate myriad ways that the integrity of the data in an EHR or other health IT system can be compromised, resulting in the presence of incomplete, inaccurate, or out-of-date information. Contributing factors include patient/data association errors, missing data or delayed data delivery, clock synchronisation errors, inappropriate use of default values, use of dual workflows (paper and electronic), copying and pasting of older information into a new report, and even basic data entry errors.

Some of the mechanisms by which the information in an EHR or other health IT system could become compromised:

• Patient/data association errors

• Missing data or delayed data delivery

• Clock synchronisation errors

• Inappropriate use of default values

• Maintaining hybrid (paper and electronic) workflows

• Copying and pasting older information into a new report

• Data entry errors, e.g. entering incorrect information, selecting the wrong item from a drop-down menu, or entering information in the wrong field.
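Some of the mechanisms listed above lend themselves to automated checks when data arrives. The sketch below covers three of them (missing data, patient/data association and clock synchronisation). It assumes a hypothetical record layout and an arbitrary 30-second skew tolerance, neither of which comes from the source.

```python
from datetime import datetime, timedelta

MAX_CLOCK_SKEW = timedelta(seconds=30)  # HYPOTHETICAL tolerance
REQUIRED_FIELDS = ("mrn", "date_of_birth", "measured_at", "value")  # HYPOTHETICAL schema


def integrity_problems(result, chart, received_at):
    """Return a list of data-integrity problems found in one incoming result."""
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS
                if result.get(name) is None]
    if problems:
        return problems  # cannot check further without the required fields

    # Patient/data association: two identifiers must both match the chart.
    if (result["mrn"], result["date_of_birth"]) != (chart["mrn"], chart["date_of_birth"]):
        problems.append("patient/data association mismatch")

    # Clock synchronisation: a measurement stamped after receipt suggests drift.
    if result["measured_at"] - received_at > MAX_CLOCK_SKEW:
        problems.append("device clock ahead of receiving system")
    return problems


chart = {"mrn": "A1", "date_of_birth": "2010-01-01"}
now = datetime(2014, 1, 1, 12, 0, 0)
incoming = {"mrn": "A2", "date_of_birth": "2010-01-01",
            "measured_at": now + timedelta(minutes=5), "value": 98.6}
print(integrity_problems(incoming, chart, now))
```

Checks like these catch only the mechanical failures; copy-and-paste and hybrid-workflow problems still need process changes.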

Recommendations

• Before implementing a new system or modifying an existing one, assess the clinical workflow to understand how the data is (or will be) used by frontline staff, and identify inefficiencies as well as any potential error sources.

• Test, test, and retest.

• Phase out paper.

• Provide comprehensive user training.

• Provide support during and after implementation.

• Facilitate problem

Neglecting Change Management for Networked Devices and Systems (no. 7)

The growing interrelationship between medical technology and IT offers significant benefits. However, one underappreciated consequence of system interoperability is that updates, upgrades, or modifications made to one device or system can have unintended effects on other connected devices or systems. ECRI Institute is aware of incidents in which planned and proactive changes to one device or system (relating, for example, to upgrading software and systems, improving wireless networks, or addressing cybersecurity threats) have adversely affected other networked medical devices and systems. To prevent such downstream effects, alterations to a network or system must be performed in a controlled manner and with the full knowledge of the personnel who manage or use the connected systems. Unfortunately, change management, a structured approach for completing such alterations, appears to be an underutilised practice.

Discussion

In today’s hospitals, initiatives that once may have been considered ‘IT projects’ must instead be viewed as ‘clinical projects that require IT expertise.’ Software upgrades, security patches, server modifications, changes to or replacement of network hardware, and other system changes can adversely affect patient care if not implemented in a way that accommodates both IT and medical technology needs. Consider the following examples:

• An ECRI Institute member hospital described an incident in which a facility-wide PC operating system upgrade caused the loss of remote-display capability for its foetal monitoring devices. The facility had configured its foetal monitoring system so that nurses could view the output on a PC located outside the patient’s room. However, these displays became non-functional when the IT department pushed out a Windows 7 upgrade to the computers connected to the network. The PC application that allowed the display of the foetal monitor information was not compatible with Windows 7.

• Another member hospital experienced problems displaying foetal monitor data on workstations at the nurses’ station following an IT change. In this case, the problems began after the IT department moved the obstetrical data management system server offsite. No verification testing was performed to ensure continued performance after the change.

• An update to the firmware for the wireless access points at a member hospital caused the loss of wireless functionality for some of the facility’s medical devices. Some physiologic monitors, for example, required a wired connection for months until a fix could be implemented.

• A recent article describes an incident in which an EHR software upgrade resulted in changes to certain radiology reports, causing fields for the date and time of the study to drop from the legal record. The fields remained in the screen display, so staff using the EHR system did not detect the change to the legal record. (See the June 2013 edition of ECRI Institute’s Risk Management Reporter for details.)

Appropriate change management policies and procedures, as outlined in the recommendations below, can help minimise the risks. Just as important, however, is to cultivate an environment in which IT, clinical engineering, and nursing/medical personnel (1) are aware of how their work affects other operations, patient care, and work processes, particularly clinical work processes, and (2) are able to work together to prevent IT-related changes from adversely affecting networked medical devices and systems.
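The EHR incident above, in which fields vanished from the legal record but stayed on screen, is the kind of regression a simple before-and-after comparison can catch during verification testing. The sketch below assumes that the set of fields in a record export can be captured before and after the upgrade. The field names are hypothetical.

```python
def dropped_fields(before, after):
    """Return fields present in the pre-upgrade record but absent afterwards."""
    return sorted(set(before) - set(after))


# HYPOTHETICAL field names for a radiology report's legal record
legal_record_before = {"patient_id", "study_date", "study_time", "findings"}
legal_record_after = {"patient_id", "findings"}

missing = dropped_fields(legal_record_before, legal_record_after)
if missing:
    print("Upgrade verification failed; fields dropped:", ", ".join(missing))
```

Comparing the stored record rather than the screen display matters here, since in the reported incident the display looked unchanged.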

Recommendations

Effective approaches to change management include the following:

• Facilitate good working relationships among departments that have a direct responsibility for health IT systems, medical technology, and change management. Involve the appropriate stakeholders when changes are planned.

• Maintain an inventory listing the interfaced devices and systems present within the institution, including the software versions and configurations of the various interfaced components.

• Take steps to ensure that changes are assessed, approved, tested, and implemented in a controlled manner. ECRI Institute recommends that, when possible, the changes and associated system functionality be tested and verified in a test environment before implementation in a live clinical setting. Change management applies to a variety of actions, including hardware upgrades, software upgrades, security changes, new applications, new work processes, and planned maintenance.

• Evaluate the facility’s policies and procedures regarding change management. Care should be taken to determine how technology decisions involving health IT systems, medical devices, and IT networks can affect current operations, patient care, and clinician work processes.

• Develop contract wording that is specific to change management. For example, contracts with vendors should require the necessary documents (e.g., revised specifications, software upgrade documentation, test scenarios) to be provided to the appropriately designated staff member(s) to facilitate change management. Stipulating that vendors provide advance notice of impending changes can give healthcare facilities time to budget and adequately plan for those changes.

• Ensure that any system updates do not jeopardise processes to maintain the privacy of patients’ protected health information and the security of records containing that information.

• When making changes to interfaced systems, closely monitor the systems after the change is made to ensure their safe and effective performance.

• Provide frontline staff members with a point of contact for reporting problems related to change management and health IT systems. Education, training, and good escalation procedures (so that reports reach someone who can respond if the first person is unavailable or lacks the necessary competence) can help to ensure that problems are addressed with the appropriate urgency.

• In addition, consider applying risk management principles to change management as discussed in the IEC 80001-1 standard, Application of Risk Management for IT-Networks Incorporating Medical Devices - Part 1: Roles, Responsibilities and Activities.
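The inventory recommendation above can be made concrete with a small sketch: if the inventory records which components depend on which, a planned change can be expanded into the list of downstream devices and systems that need verification testing. The component names, versions and dictionary structure are hypothetical, loosely modelled on the incidents described in the discussion.

```python
from collections import deque

# HYPOTHETICAL inventory: component -> software version and the
# interfaced components that depend on it.
INVENTORY = {
    "nursing-station-pc": {"version": "OS 7", "dependents": ["foetal-monitor-display"]},
    "foetal-monitor-display": {"version": "2.1", "dependents": []},
    "wireless-access-point": {"version": "fw 1.4", "dependents": ["physiologic-monitor"]},
    "physiologic-monitor": {"version": "5.0", "dependents": []},
}


def affected_by_change(inventory, changed):
    """Return every component downstream of `changed`, in breadth-first order."""
    seen = {changed}
    queue = deque([changed])
    order = []
    while queue:
        current = queue.popleft()
        for dependent in inventory[current]["dependents"]:
            if dependent not in seen:
                seen.add(dependent)
                order.append(dependent)
                queue.append(dependent)
    return order


for component in affected_by_change(INVENTORY, "nursing-station-pc"):
    print("verify after change:", component, INVENTORY[component]["version"])
```

The breadth-first walk also picks up indirect dependents, which are the ones most easily overlooked when a change is planned by a single department.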