Leaders see AI acumen as crucial, but aren’t confident in their own or their organization’s ability to embrace the technology today.

In the six months since we first asked leaders about generative artificial intelligence (GenAI), confidence in the technology’s potential has remained high.

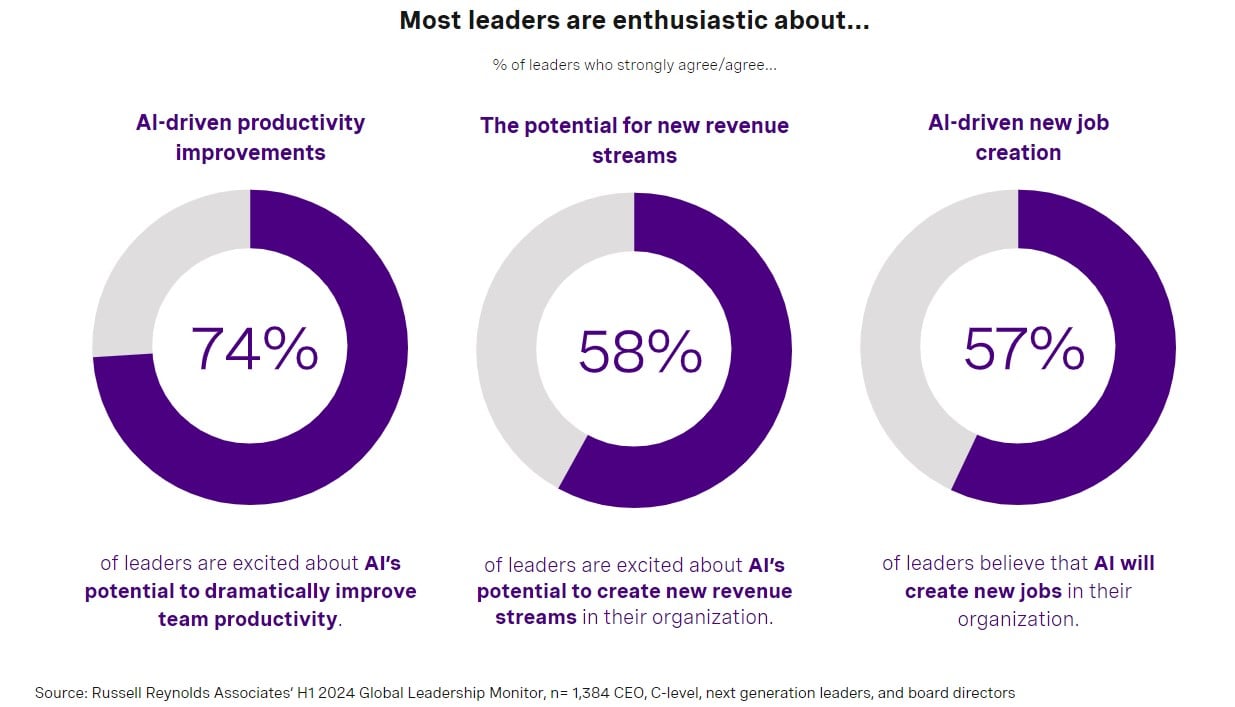

According to Russell Reynolds Associates’ most recent Global Leadership Monitor, there’s significant enthusiasm around how AI might revolutionize organizations, with 74% of leaders reporting excitement around AI’s potential to dramatically improve team productivity, and 58% believing in AI’s potential to create new revenue streams in their organization (Figure 1).

And while it’s impossible to avoid wondering about the potential downstream impacts on workforces, leaders are far more likely to believe that AI will create new jobs in their organizations than they are to be worried about AI causing layoffs (57% vs 15% strongly agree/agree).

However, This Optimism Falters When Leaders Consider the Realities of their Organization’s Capabilities

Regardless of whether this is increasing pessimism or a natural low point in the AI learning curve, it’s clear that leaders are noting significant gaps in their organization’s data quality, processes, and talent capabilities to guide AI use that’s both impactful and ethical.

Poor Data Quality and AI Processes

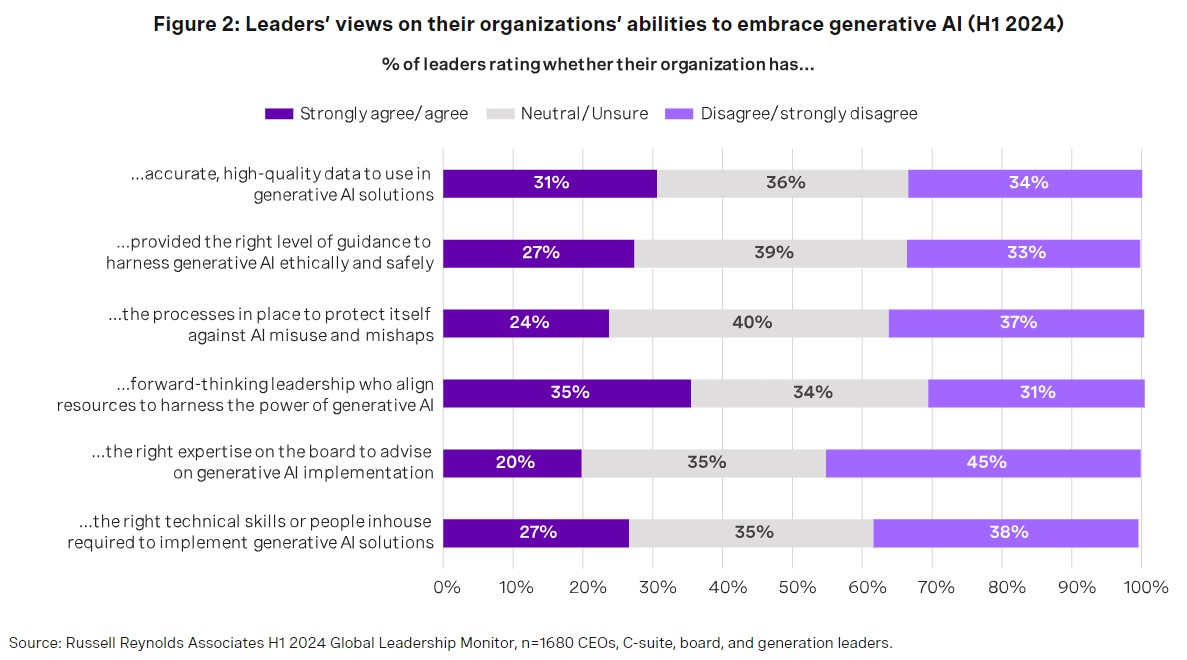

Leaders lack confidence in their organization’s data capabilities: 31% agree that their organization has accurate, high-quality data to use in GenAI solutions, while 34% disagree (Figure 2). Leaders are even less optimistic about their organization’s accompanying AI processes and training. Only 27% agree that their organization has provided the right level of guidance to harness GenAI ethically and safely, and only 24% agree that they have the right processes in place to protect against AI misuse.


Dismal Views on Talent’s AI Capabilities

Only a small portion of leaders are confident in their leaders’, employees’, and board’s ability to embrace GenAI. This concern is especially pronounced at board level, as only 20% of all leaders agree that their organization has the right expertise on the board to advise on generative AI implementation (Figure 2).

Compared to six months ago, the share of leaders who agree that they have the right technical skills or people in-house to implement GenAI solutions has fallen from 33% to 27%. This is likely a function of leaders learning more about AI’s complexities and, by extension, recognizing the gap between their current state and the future requirements for realistically harnessing the technology.

Leaders Also Lack Confidence in their Personal AI Skills

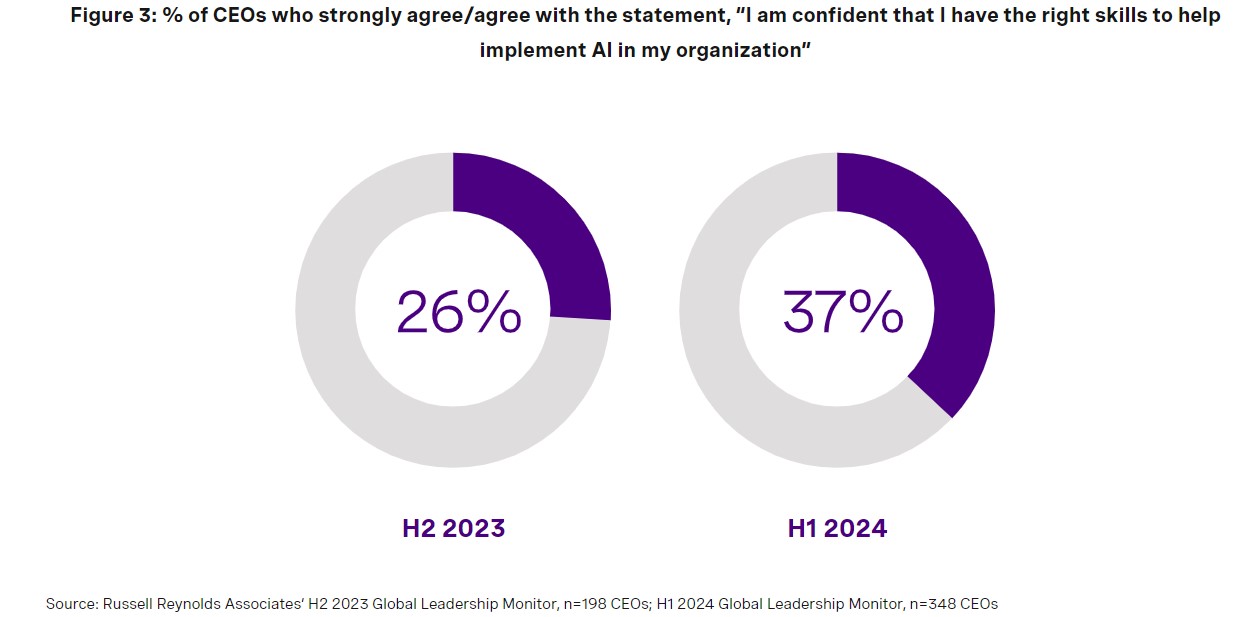

These harsh views aren’t confined to how leaders see each other; leaders also aren’t feeling particularly confident in themselves. In fact, while 76% of leaders believe that a strong understanding of GenAI will be required for future C-suite members, only 37% of all leaders are confident that they personally have the right skills to implement AI in their organizations.

This confidence level has remained relatively flat, increasing five percentage points over the last six months.

The one exception? CEOs. Six months ago, only 26% of CEOs reported confidence in their personal AI skills. This has since jumped to 37%, meaning CEOs are gaining confidence more rapidly than their counterparts (Figure 3).

Given the nature of the CEO role, this initial skepticism makes sense. However, there’s been a major push to increase CEO exposure to and comfort with AI, which likely explains CEOs’ recent confidence jump.

The Emerging AI Leadership Deficit

This gap is creating an AI leadership deficit, in which leaders see AI skills as crucial for the future but lack confidence in their own or their organization’s ability to embrace AI today.


Not only does this create risk at the organizational level, it has serious implications for responsible AI implementation. When we asked leaders what they’ve done to ensure responsible AI use, they reported a wide range of actions—from leadership changes to tech investments to banning AI entirely. But within this range of responses, two notable groups arose:

- Those who created internal policies, processes, and trainings around responsible AI.

- Those who have done nothing to ensure responsible AI use.

Those who haven’t taken action account for 35% of respondents, making this the most common answer.

While you might expect this 35% of leaders to overlap with the 31% of leaders who haven’t taken any steps to implement GenAI, that’s actually not the case. Concerningly, 21% of those who haven’t taken any action on responsible AI have gone ahead and implemented AI in their organization.

And while 21% of 35% of leaders (effectively 7%) may not seem worth commenting on, consider that this 7% represents early AI adopters, and may have a disproportionate effect on shaping the understanding of and approaches to AI in organizations. So while they represent a small portion of the leaders we surveyed, it’s likely that this population has an outsized impact on the way we think about and interact with AI.
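As a quick check on that figure, and assuming both percentages are measured against the same base of surveyed leaders, the arithmetic works out as follows:

$$
0.21 \times 0.35 = 0.0735 \approx 7\%
$$

In other words, roughly 7 in every 100 leaders surveyed report that AI has been implemented in their organization without any action on responsible use.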

Six Recommendations for an AI-Enabled Business Transformation

To bridge the gap between this future AI optimism and current practical concerns, consider the following:

1. Your leadership team needs to match your business transformation ambitions.

   The rise of AI isn’t just about implementing new tools; we are in the realm of full business model transformation. As AI brings a seismic shift to the way we work, public and private sector leaders need to rethink how their organizations fundamentally operate. AI strategy loses its meaning without a capable execution team. As such, it’s key to have a clear-eyed view on which capabilities your organization has in-house, where any gaps may occur, and how your talent strategy needs to adapt to your future-state goals.

2. This isn’t a mission for the ‘Hero CEO’: take a team approach to AI ownership, literacy, and ethics.

   It’s hard for corporate leaders to think about the time horizons AI requires, especially when they aren’t digital natives. As such, they need a team of people around them to lighten this load and weigh in on how AI can factor into their organizations. An AI strategy isn’t complete without accompanying accountability, and that accountability can’t be managed by one person. Provide clarity around who drives strategy, who is responsible for day-to-day execution, and AI’s specific value creation opportunities.

3. Rather than looking to early AI adopters, develop an AI strategy rooted in responsible principles that align with how your organization functions and your intended AI usage.

   Guidance like the NIST AI Risk Management Framework provides recommendations for ethically implementing AI across industries. Once you’ve decided which framework best suits your needs, develop clear documentation around AI usage, your organization’s responsible AI principles, and how you’ll audit both.

4. Create sunnier views around your ethical AI implementation by demonstrating a commitment to managing culture.

   We’ve heard countless musings about how organizations will deploy AI, and whether those use cases will have a positive or negative personal impact on people. To address this, commit to actively managing your organization’s culture. Our data shows that when leaders believe that their senior leadership teams are taking active ownership of their organization’s culture, they are twice as likely to agree that their organization has provided the right level of guidance to harness generative AI ethically and safely. Create clear communication plans around your internal and external AI use cases and policies, upskilling and reskilling programs, and how AI will drive long-term impact.

5. Consider an AI ethics leader to oversee a diverse team of thought leaders and advise the board and C-suite.

   Establishing and maintaining ethical AI policies isn’t a one-person job: it would be near-impossible to find someone with this range of skills, and the field is changing too quickly for one person to keep up with. An AI ethics leader can oversee a team that brings technical, regulatory, industry, and organizational knowledge, as well as business-savvy communications skills and cross-organizational partnerships.

6. Look for, and strive to be, a well-rounded leader.

   Today, most companies don’t have one specific leader whose primary focus is to identify and address AI issues across the enterprise. What’s more, the people involved with AI tend to be experts in subjects such as technology, business, law, and regulatory compliance and lack expertise in subjects like psychology, sociology, and philosophy, which are essential to tackling business and ethical issues effectively. In an AI-enabled future, leaders who embrace seemingly opposing schools of thought (for example, engineers who have studied philosophy and have learned to develop crisp mental models) will be better equipped to elevate their thinking, effectively leverage these new tools, and deploy human-centered judgment, creativity, and strategic thinking.

Authors

Fawad Bajwa leads Russell Reynolds Associates’ AI, Analytics & Data Practice globally. He is based in Toronto and New York.

Leah Christianson is a member of Russell Reynolds Associates’ Center for Leadership Insight. She is based in San Francisco.

Tom Handcock leads Russell Reynolds Associates’ Center for Leadership Insight. He is based in London.

George Head leads Russell Reynolds Associates’ Technology Officers Knowledge team. He is based in London.

Tristan Jervis leads Russell Reynolds Associates’ Technology practice. He is based in London.

Tuck Rickards is a senior member and former leader of Russell Reynolds Associates’ Technology practice. He is based in San Francisco.

The authors wish to thank the 2,700+ leaders from RRA’s global network who completed the H1 2024 Global Leadership Monitor. Their responses to the survey have contributed greatly to our understanding of leadership in 2024 and beyond.