Whole slide images in digital pathology capture detailed tissue morphology, but their gigapixel scale creates computational and annotation barriers for routine automation. A multimodal approach that combines these images with information contained in pathology reports promises gains in accuracy and consistency without exhaustive pixel-level labels. MPath-Net, an end-to-end framework that fuses image features from multiple-instance learning with text embeddings from transformer models, targets tumour subtype classification across kidney and lung cancers using publicly available data. Reported performance improvements over established unimodal and multimodal baselines underscore the value of integrating visual and textual signals for decision support in cancer diagnostics and workflow efficiency.

Dataset and Clinical Task

The framework is evaluated on a curated selection from The Cancer Genome Atlas encompassing 1684 cases, with 916 kidney and 768 lung records, spanning five disease types: Kidney Renal Clear Cell Carcinoma, Kidney Renal Papillary Cell Carcinoma, Kidney Chromophobe, Lung Adenocarcinoma and Lung Squamous Cell Carcinoma. Patient-level splits allocate 70% for training, 10% for validation and 20% for testing, fixed across experiments to ensure consistent comparisons. Only pathology reports that were clear and readable in PDF format were retained, and optical character recognition was used to create a clean, machine-readable corpus. The task is multi-class subtype classification, aligning with clinical aims such as tailoring therapies, guiding monitoring and reducing variability in interpretation.
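
A patient-level split with a fixed seed can be sketched in Python as below. This is an illustrative sketch, not the authors' code: the function names, the seed and the assumption that the first 12 characters of a TCGA-style barcode identify the patient are ours. Grouping by patient before shuffling keeps every slide and report from one patient inside a single split, which prevents leakage between training and testing.

```python
import random


def patient_level_split(case_ids, patient_id, fractions=(0.7, 0.1, 0.2), seed=0):
    """Assign every case of a patient to exactly one of train/val/test."""
    patients = sorted({patient_id(c) for c in case_ids})
    rng = random.Random(seed)  # fixed seed -> the same split in every experiment
    rng.shuffle(patients)

    n = len(patients)
    n_train = round(fractions[0] * n)
    n_val = round(fractions[1] * n)
    groups = {
        "train": set(patients[:n_train]),
        "val": set(patients[n_train:n_train + n_val]),
        "test": set(patients[n_train + n_val:]),  # remainder (~20%)
    }

    splits = {name: [] for name in groups}
    for c in case_ids:
        for name, members in groups.items():
            if patient_id(c) in members:
                splits[name].append(c)
    return splits


# Assumption: TCGA-style barcodes whose first 12 characters name the patient.
def tcga_patient(barcode):
    return barcode[:12]


# Toy cohort: 10 hypothetical patients with two slides each.
cases = [f"TCGA-AA-{i:04d}-01Z-00-DX{j}" for i in range(10) for j in (1, 2)]
splits = patient_level_split(cases, tcga_patient)
print({k: len(v) for k, v in splits.items()})  # {'train': 14, 'val': 2, 'test': 4}
```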

Whole slide images (WSIs) are preprocessed into tissue-containing patches, with background removed using an edge-based filtering criterion. Multiple-instance learning (MIL) treats each slide as a bag of instances, enabling weak supervision when patch-level labels are unavailable. In this setting, the slide-level label supervises training while the model aggregates patch embeddings to infer the slide's subtype. This mirrors clinical practice, where image patterns and narrative reports are considered together to delineate tumour characteristics and support subtype assignment.
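
A minimal sketch of tiling with edge-based background filtering follows, assuming a grayscale slide region already loaded as a NumPy array with values in [0, 1]. The patch size, gradient-magnitude threshold and minimum edge fraction are hypothetical values, and a finite-difference gradient stands in for whatever edge detector the authors actually used. Flat, bright background yields almost no edges and is dropped; the surviving patches form the bag that a MIL model consumes under the slide label.

```python
import numpy as np


def tile_and_filter(image, patch=224, edge_thresh=0.05, min_edge_frac=0.1):
    """Tile a grayscale image (values in [0, 1]) and keep edge-rich patches.

    Returns the bag of kept patches and their (row, col) pixel offsets.
    """
    h, w = image.shape
    bag, coords = [], []
    for y in range(0, h - patch + 1, patch):
        for x in range(0, w - patch + 1, patch):
            tile = image[y:y + patch, x:x + patch]
            gy, gx = np.gradient(tile)        # finite-difference gradients
            magnitude = np.hypot(gx, gy)      # per-pixel edge strength
            if (magnitude > edge_thresh).mean() >= min_edge_frac:
                bag.append(tile)              # tissue-like texture: keep
                coords.append((y, x))
    bag = np.stack(bag) if bag else np.empty((0, patch, patch))
    return bag, coords


# Toy slide: flat bright background on the left, textured "tissue" on the right.
rng = np.random.default_rng(0)
slide = np.ones((64, 128))
slide[:, 64:] = rng.random((64, 64))
bag, coords = tile_and_filter(slide, patch=64)
print(bag.shape, coords)  # (1, 64, 64) [(0, 64)]
```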

Model Design and Training

MPath-Net adopts a feature-level fusion strategy. On the imaging pathway, a Dual-Stream Multiple Instance Learning (DSMIL) architecture with a ResNet-18 backbone produces a 512-dimensional slide representation from tissue patches. The final classification layer of the MIL network is removed, and the aggregator's embeddings serve as image features. On the text pathway, pathology reports are embedded with transformer models. A Sentence-BERT encoder produces 768-dimensional representations that a trainable multilayer perceptron projects to 512 dimensions to align with the image feature space. The concatenated 1024-dimensional vector then passes through fully connected layers with normalisation, rectified linear activations and dropout before a softmax output over the five classes. During end-to-end training, the image encoder, fusion layers and classifier are optimised while the text encoder remains frozen to preserve its pretrained domain representations.
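
The fusion head can be sketched as a NumPy forward pass to make the tensor shapes concrete. This is not the authors' implementation: random weights stand in for trained parameters, and the two-layer text projector, the 256-unit hidden layer, layer normalisation as the "normalisation" step and the 0.3 dropout rate are all assumptions. The inputs stand in for the 512-dimensional slide embedding and the frozen 768-dimensional Sentence-BERT report embedding. Layer normalisation is used here because it does not depend on batch statistics, which matters at a batch size of 1.

```python
import numpy as np

rng = np.random.default_rng(0)


def dense(in_dim, out_dim):
    """Randomly initialised weights standing in for trained parameters."""
    return rng.normal(0.0, np.sqrt(2.0 / in_dim), (in_dim, out_dim)), np.zeros(out_dim)


def layer_norm(x, eps=1e-5):
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def relu(x):
    return np.maximum(x, 0.0)


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


# Trainable text projector: 768 -> 512 (two layers is an assumption).
W_t1, b_t1 = dense(768, 512)
W_t2, b_t2 = dense(512, 512)
# Fusion classifier: 1024 -> 256 (assumed width) -> 5 subtypes.
W_f1, b_f1 = dense(1024, 256)
W_f2, b_f2 = dense(256, 5)


def fuse_and_classify(img_emb, txt_emb, train=False, p_drop=0.3):
    """img_emb: (B, 512) slide embeddings; txt_emb: (B, 768) frozen report embeddings."""
    txt_proj = relu(txt_emb @ W_t1 + b_t1) @ W_t2 + b_t2   # (B, 512)
    fused = np.concatenate([img_emb, txt_proj], axis=-1)    # (B, 1024)
    h = relu(layer_norm(fused @ W_f1 + b_f1))               # (B, 256)
    if train:  # inverted dropout; identity at inference time
        keep = rng.random(h.shape) >= p_drop
        h = h * keep / (1.0 - p_drop)
    return softmax(h @ W_f2 + b_f2)                         # (B, 5) probabilities


probs = fuse_and_classify(rng.normal(size=(1, 512)), rng.normal(size=(1, 768)))
print(probs.shape)  # (1, 5)
```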


Training uses the Adam optimiser with a learning rate of 0.0001 and a batch size of 1 on A100 GPUs. Evaluation reports accuracy, precision, recall, F1-score and area under the receiver operating characteristic curve (AUC), with 95% confidence intervals obtained by bootstrap resampling. To isolate the effect of fusion and architecture, all unimodal MIL baselines are trained with the same SimCLR-pretrained ResNet-18 backbone used in MPath-Net. Text encoders explored include BioBERT, ClinicalBERT, Clinical BioBERT, PathologyBERT and Sentence-BERT; the Sentence-BERT variant is selected for the primary model on the basis of its performance.
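
A percentile bootstrap for a 95% confidence interval can be sketched as follows. The resample count, seed and helper names are assumptions; the article does not state how many bootstrap replicates were drawn or whether a percentile or another bootstrap variant was used. Each replicate resamples the test cases with replacement and recomputes the metric, and the 2.5th and 97.5th percentiles of those replicates bound the interval.

```python
import numpy as np


def accuracy(y_true, y_pred):
    return float(np.mean(y_true == y_pred))


def bootstrap_ci(y_true, y_pred, metric, n_boot=1000, alpha=0.05, seed=0):
    """Point estimate plus a percentile-bootstrap (1 - alpha) confidence interval."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    rng = np.random.default_rng(seed)
    n = len(y_true)
    stats = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample test cases with replacement
        stats[b] = metric(y_true[idx], y_pred[idx])
    lo, hi = np.quantile(stats, [alpha / 2, 1 - alpha / 2])
    return metric(y_true, y_pred), (float(lo), float(hi))


# Toy test set: 100 cases over 5 classes, 10 of them misclassified.
y_true = np.arange(100) % 5
y_pred = y_true.copy()
y_pred[:10] = (y_pred[:10] + 1) % 5
point, (lo, hi) = bootstrap_ci(y_true, y_pred, accuracy)
print(f"accuracy {point:.2f}, 95% CI [{lo:.3f}, {hi:.3f}]")
```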

Performance and Interpretability

Across the five-class task, the Sentence-BERT-based variant achieves an accuracy of 0.9487, precision of 0.9495, recall of 0.9445, F1-score of 0.9460 and AUC of 0.9902. These results exceed those of MIL baselines including TransMIL, ACMIL, ABMIL, MaxMIL and MeanMIL, with p-values consistently below 0.05 for accuracy, precision, recall and F1-score. Class-wise comparisons show MPath-Net leading in three of the five classes. The multimodal model also surpasses the unimodal image-only and text-only configurations on the main threshold-based metrics, although the image-only variant can attain a slightly higher AUC, reflecting a trade-off between ranking ability and thresholded classification when textual features are incorporated.
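
Class-wise and averaged scores can be derived from a confusion matrix, as in the sketch below. The article does not state which averaging scheme produced the headline precision, recall and F1-score, so the macro average (unweighted mean over classes) used here is an assumption, as are the function names. Per-class values are what a class-wise comparison like the one above would rank.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    cm = np.zeros((n_classes, n_classes), dtype=int)
    # Rows index the true class, columns the predicted class.
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def class_metrics(cm):
    """Per-class and macro-averaged precision, recall and F1 from a confusion matrix."""
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)  # column sums: cases predicted as each class
    actual = cm.sum(axis=1)     # row sums: cases truly in each class
    zeros = np.zeros_like(tp)
    precision = np.divide(tp, predicted, out=zeros.copy(), where=predicted > 0)
    recall = np.divide(tp, actual, out=zeros.copy(), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    return {
        "accuracy": tp.sum() / cm.sum(),
        "precision": precision, "recall": recall, "f1": f1,
        "macro_precision": precision.mean(),
        "macro_recall": recall.mean(),
        "macro_f1": f1.mean(),
    }


cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], n_classes=3)
m = class_metrics(cm)
print(cm.tolist())  # [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
```

Note that the macro F1-score is the mean of per-class F1 values, so it need not equal the harmonic mean of macro precision and macro recall.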

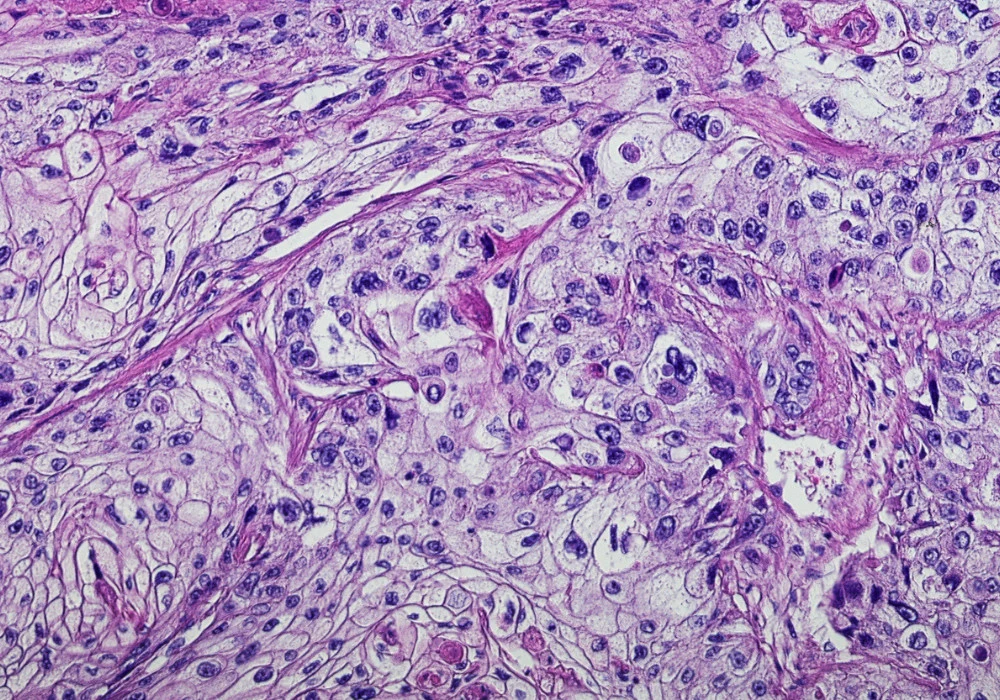

Interpretability is addressed through attention heatmaps that highlight regions of interest at patch level on whole slides. Normalised attention scores mapped to colours help localise tumour-suggestive tissue, offering a visual explanation aligned with pathologist reviews. Illustrations compare attention patterns from baseline MIL models with the multimodal output, showing concentrated regions where tumour evidence is strongest. This transparency supports potential clinical utility by aligning model focus with tissue areas that drive classification decisions.
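
The heatmap construction can be sketched as min-max normalisation of per-patch attention scores followed by placement on a grid of patch positions (row and column indices, for example pixel offsets divided by the patch size). The function names and the simple blue-to-red colour map are hypothetical; a production pipeline would more likely use a perceptual colour map and overlay the result on a slide thumbnail.

```python
import numpy as np


def attention_heatmap(scores, coords, grid_shape):
    """Place min-max normalised patch attention scores on a slide-level grid."""
    scores = np.asarray(scores, dtype=float)
    span = scores.max() - scores.min()
    norm = (scores - scores.min()) / span if span > 0 else np.zeros_like(scores)
    heat = np.full(grid_shape, np.nan)  # NaN marks background / unsampled cells
    for (row, col), value in zip(coords, norm):
        heat[row, col] = value
    return heat


def to_rgb(heat):
    """Linear blue (low) -> red (high) colour map; background stays white."""
    rgb = np.ones(heat.shape + (3,))
    valid = ~np.isnan(heat)
    rgb[valid, 0] = heat[valid]        # red channel grows with attention
    rgb[valid, 1] = 0.0
    rgb[valid, 2] = 1.0 - heat[valid]  # blue channel fades with attention
    return rgb


# Three hypothetical patches on a 2 x 2 grid; cell (1, 0) is background.
heat = attention_heatmap([0.1, 0.5, 0.9], [(0, 0), (0, 1), (1, 1)], (2, 2))
rgb = to_rgb(heat)
```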

An end-to-end multimodal framework that fuses weakly supervised whole-slide embeddings with transformer-based report features improves tumour subtype classification for kidney and lung cancers within a large public cohort. By projecting images and text into a shared representation space and training the visual pathway jointly with the fusion layers, MPath-Net advances accuracy and consistency over established MIL baselines and unimodal counterparts. With statistically significant gains across core metrics and attention-based visualisations that expose the slide regions underpinning predictions, the approach illustrates how integrating complementary data streams can support pathologists, reduce inter-reader variability and align with precision oncology goals.

Source: Journal of Healthcare Informatics Research

Image Credit: iStock