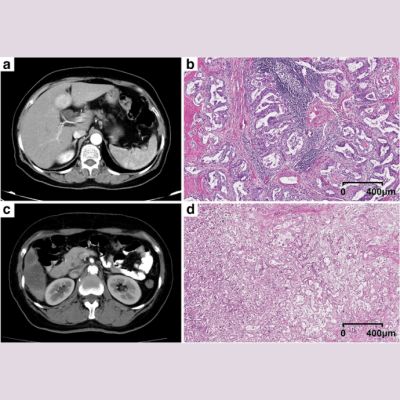

In a recent study conducted at the Icahn School of Medicine at Mount Sinai in New York, researchers used machine learning (ML) techniques to identify clinical concepts in radiologist reports for CT scans (Zech et al. 2018).

These techniques, which include natural language processing (NLP) algorithms, represent an important first step in the development of artificial intelligence (AI) that could in time interpret scans and diagnose conditions. Researchers trained the software using 96,303 radiologist reports associated with head CT scans performed at The Mount Sinai Hospital and Mount Sinai Queens between 2010 and 2016. Examples of terminology included words like phospholipid, heartburn, and colonoscopy. To gauge the "lexical complexity" of radiologist reports, researchers calculated metrics reflecting the variety of language used in these reports and compared them with other large collections of text: thousands of books, Reuters news stories, inpatient physician notes, and Amazon product reviews.
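The article does not say which lexical-complexity metrics the researchers used. As an illustration only, the sketch below assumes the type-token ratio (distinct words divided by total words), a common measure of vocabulary variety, plus a windowed variant that makes texts of different lengths easier to compare. The function names and example sentences are hypothetical and are not taken from the study.

```python
import re


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic word tokens (a deliberately simple tokenizer)."""
    return re.findall(r"[a-z]+", text.lower())


def type_token_ratio(tokens: list[str]) -> float:
    """Distinct word types divided by total tokens; higher means more varied vocabulary."""
    if not tokens:
        return 0.0
    return len(set(tokens)) / len(tokens)


def mean_segmental_ttr(tokens: list[str], window: int = 100) -> float:
    """Average TTR over consecutive, non-overlapping windows of `window` tokens.

    Raw TTR tends to fall as a text gets longer, so equal-sized windows make
    corpora of different sizes easier to compare. Trailing tokens that do not
    fill a whole window are ignored; texts shorter than one window fall back
    to plain TTR.
    """
    segments = [tokens[i:i + window] for i in range(0, len(tokens) - window + 1, window)]
    if not segments:
        return type_token_ratio(tokens)
    return sum(type_token_ratio(s) for s in segments) / len(segments)


if __name__ == "__main__":
    # Invented example sentences, not data from the study
    samples = {
        "report": "No acute intracranial hemorrhage. No mass effect. No midline shift.",
        "review": "Great blender, works great, would buy again for the price.",
    }
    for name, text in samples.items():
        toks = tokenize(text)
        print(name, len(toks), round(type_token_ratio(toks), 3))
```

A real comparison across books, news stories, clinical notes and reviews would also need consistent tokenization and large, equally sized samples from each collection.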

First author John Zech, a medical student at the Icahn School of Medicine at Mount Sinai, commented in a media release: "Deep learning has many potential applications in radiology—triaging to identify studies that require immediate evaluation, flagging abnormal parts of cross-sectional imaging for further review, characterizing masses concerning for malignancy—and those applications will require many labeled training examples."

Despite mixed views about AI in the field, the technology could one day help radiologists interpret x-rays, computed tomography (CT) scans, and magnetic resonance imaging (MRI) studies. For it to be effective in medicine, however, computer software must first be "taught" the difference between a normal study and abnormal findings. This study was designed to help teach the technology to understand text reports written by radiologists, using a series of algorithms that teach the computer to recognise clusters of phrases.

When asked how long it will take before computer software can tell the difference between a normal study and an abnormal finding, Dr. Eric Oermann, Instructor in the Department of Neurosurgery at the Icahn School of Medicine at Mount Sinai, explained to HealthManagement in an email: “I've only been a physician for five years, and I'm still amazed by the variation in 'normal' that I encounter every single day. Part of the challenge is that this depends on how you phrase the problem, what population you're working with, and what imaging modality you're working with. Are you concerned with findings or diagnoses, for example? In some cases, like segmentation (see the latest Multimodal Brain Tumour Segmentation Challenge [BraTS: braintumorsegmentation.org] and Ischaemic Stroke Lesion Segmentation [ISLES] results: isles-challenges.org), this is already done pretty well in a research setting, but in short we still have a lot of work to do.”

“Machine learning as a whole is neither artificial nor intelligent. Modern machine learning and deep learning are really just plain old linear algebra and calculus combined with modern high-performance computing,” Dr. Oermann explained, adding: “I think the modern successes in machine learning have helped spur our thinking in terms of what mechanistically constitutes intelligence, but we really have so much to learn, both in terms of the fundamentals of machine learning and of neuroscience.”

Deep learning is a subcategory of ML that uses neural networks (computer systems that learn progressively) with multiple layers to perform inference, and it requires large amounts of training data. The techniques used in this study achieved an accuracy of 91 percent, demonstrating that it is possible to automatically identify concepts in text from the complex domain of radiology.

Machine learning models may only "technically" be a type of AI, but they can be quite helpful, according to Dr. Oermann. Expert-driven machine learning systems can be used as part of a student-teacher or weakly supervised learning setup. In such a framework, the machine learning "teacher" algorithm trains the deep learning "student" algorithm, which eventually surpasses it. Ronald Summers of the National Institutes of Health Clinical Center applies this approach to a segmentation task in his latest research, and learning from weakly supervised labels is a big part of the impressive body of work from Christopher Ré at Stanford University.

Indeed, this type of research may help turn big data into useful data and is the first step in harnessing the power of AI to help patients. Dr. Oermann expressed his hope that ML can make things better, safer, faster and, most of all, more patient-centric.

Source: Radiology

Image Credit: Pixabay